25 Data Architecture courses delivered Online

Develop Big Data Pipelines with R, Sparklyr & Power BI

By NextGen Learning

Develop Big Data Pipelines with R, Sparklyr & Power BI Course Overview: This course offers a comprehensive exploration of building and managing big data pipelines using R, Sparklyr, and Power BI. Learners will gain valuable insight into the entire process, from setting up and installing the necessary tools to creating effective ETL pipelines, implementing machine learning techniques, and visualising data with Power BI. The course is designed to provide a strong foundation in data engineering, enabling learners to handle large datasets, optimise data workflows, and communicate insights clearly using visual tools. By the end of this course, learners will have the expertise to work with big data, manage ETL pipelines, and use Sparklyr and Power BI to drive data-driven decisions in various professional settings. Course Description: This course delves into the core concepts and techniques for managing big data using R, Sparklyr, and Power BI. It covers a range of topics including the setup and installation of necessary tools, building ETL pipelines with Sparklyr, applying machine learning models to big data, and using Power BI for creating powerful visualisations. Learners will explore how to extract, transform, and load large datasets, and will develop a strong understanding of big data architecture. They will also gain proficiency in visualising complex data and presenting findings effectively. The course is structured to enhance learners' problem-solving abilities and their competence in big data environments, equipping them with the skills needed to manage and interpret vast amounts of information. Develop Big Data Pipelines with R, Sparklyr & Power BI Curriculum: Module 01: Introduction Module 02: Setup and Installations Module 03: Building the Big Data ETL Pipeline with Sparklyr Module 04: Big Data Machine Learning with Sparklyr Module 05: Data Visualisation with Power BI (See full curriculum) Who is this course for? 
Individuals seeking to understand big data pipelines. Professionals aiming to expand their data engineering skills. Beginners with an interest in data analytics and big data tools. Anyone looking to enhance their ability to analyse and visualise data. Career Path: Data Engineer Data Analyst Data Scientist Business Intelligence Analyst Machine Learning Engineer Big Data Consultant

AWS Certified Cloud Practitioner (CLF-C02) - Ultimate Exam Training

By Packt

This comprehensive course on AWS Certified Cloud Practitioner (CLF-C02) empowers you to fast-track your IT career. Gain in-depth knowledge of cloud computing, AWS services, and architectural concepts. With hands-on labs, quizzes, and real practice exams, you will confidently build cost-effective, fault-tolerant IT solutions on the AWS Cloud.

Register on the SQL NoSQL Big Data and Hadoop course today and build the experience, skills and knowledge you need to enhance your professional development and work towards your dream job. Study this course through online learning and take the first steps towards a long-term career. The course consists of a number of easy-to-digest, in-depth modules, designed to provide you with a detailed, expert level of knowledge. Learn through a mixture of instructional video lessons and online study materials. Receive online tutor support as you study the course, to ensure you are supported every step of the way. Get a digital certificate as proof of your course completion. The SQL NoSQL Big Data and Hadoop course is great value and allows you to study at your own pace. Access the course modules from any internet-enabled device, including computers, tablets, and smartphones. The course is designed to increase your employability and equip you with everything you need to be a success. Enrol on the course now and start learning instantly! What You Get With The SQL NoSQL Big Data and Hadoop Receive an e-certificate upon successful completion of the course Get taught by experienced, professional instructors Study at a time and pace that suits your learning style Get instant feedback on assessments 24/7 help and advice via email or live chat Get full tutor support on weekdays (Monday to Friday) Course Design The course is delivered through our online learning platform, accessible through any internet-connected device. There are no formal deadlines or teaching schedules, meaning you are free to study the course at your own pace. You are taught through a combination of Video lessons Online study materials Certification Upon successful completion of the course, you will be able to obtain your course completion e-certificate free of cost. Print copy by post is also available at an additional cost of £9.99 and PDF Certificate at £4.99. 
Who Is This Course For: The course is ideal for those who already work in this sector or are aspiring professionals. This course is designed to enhance your expertise and boost your CV. Learn key skills and gain a professional qualification to prove your newly-acquired knowledge. Requirements: The online training is open to all students and has no formal entry requirements. To study the SQL NoSQL Big Data and Hadoop course, all you need is a passion for learning, a good understanding of English, numeracy, and IT skills. You must also be over the age of 16. Course Content Section 01: Introduction Introduction 00:07:00 Building a Data-driven Organization - Introduction 00:04:00 Data Engineering 00:06:00 Learning Environment & Course Material 00:04:00 Movielens Dataset 00:03:00 Section 02: Relational Database Systems Introduction to Relational Databases 00:09:00 SQL 00:05:00 Movielens Relational Model 00:15:00 Movielens Relational Model: Normalization vs Denormalization 00:16:00 MySQL 00:05:00 Movielens in MySQL: Database import 00:06:00 OLTP in RDBMS: CRUD Applications 00:17:00 Indexes 00:16:00 Data Warehousing 00:15:00 Analytical Processing 00:17:00 Transaction Logs 00:06:00 Relational Databases - Wrap Up 00:03:00 Section 03: Database Classification Distributed Databases 00:07:00 CAP Theorem 00:10:00 BASE 00:07:00 Other Classifications 00:07:00 Section 04: Key-Value Store Introduction to KV Stores 00:02:00 Redis 00:04:00 Install Redis 00:07:00 Time Complexity of Algorithm 00:05:00 Data Structures in Redis : Key & String 00:20:00 Data Structures in Redis II : Hash & List 00:18:00 Data structures in Redis III : Set & Sorted Set 00:21:00 Data structures in Redis IV : Geo & HyperLogLog 00:11:00 Data structures in Redis V : Pubsub & Transaction 00:08:00 Modelling Movielens in Redis 00:11:00 Redis Example in Application 00:29:00 KV Stores: Wrap Up 00:02:00 Section 05: Document-Oriented Databases Introduction to Document-Oriented Databases 00:05:00 MongoDB 00:04:00 MongoDB 
Installation 00:02:00 Movielens in MongoDB 00:13:00 Movielens in MongoDB: Normalization vs Denormalization 00:11:00 Movielens in MongoDB: Implementation 00:10:00 CRUD Operations in MongoDB 00:13:00 Indexes 00:16:00 MongoDB Aggregation Query - MapReduce function 00:09:00 MongoDB Aggregation Query - Aggregation Framework 00:16:00 Demo: MySQL vs MongoDB. Modeling with Spark 00:02:00 Document Stores: Wrap Up 00:03:00 Section 06: Search Engines Introduction to Search Engine Stores 00:05:00 Elasticsearch 00:09:00 Basic Terms Concepts and Description 00:13:00 Movielens in Elasticsearch 00:12:00 CRUD in Elasticsearch 00:15:00 Search Queries in Elasticsearch 00:23:00 Aggregation Queries in Elasticsearch 00:23:00 The Elastic Stack (ELK) 00:12:00 Use case: UFO Sighting in Elasticsearch 00:29:00 Search Engines: Wrap Up 00:04:00 Section 07: Wide Column Store Introduction to Columnar databases 00:06:00 HBase 00:07:00 HBase Architecture 00:09:00 HBase Installation 00:09:00 Apache Zookeeper 00:06:00 Movielens Data in HBase 00:17:00 Performing CRUD in HBase 00:24:00 SQL on HBase - Apache Phoenix 00:14:00 SQL on HBase - Apache Phoenix - Movielens 00:10:00 Demo : GeoLife GPS Trajectories 00:02:00 Wide Column Store: Wrap Up 00:04:00 Section 08: Time Series Databases Introduction to Time Series 00:09:00 InfluxDB 00:03:00 InfluxDB Installation 00:07:00 InfluxDB Data Model 00:07:00 Data manipulation in InfluxDB 00:17:00 TICK Stack I 00:12:00 TICK Stack II 00:23:00 Time Series Databases: Wrap Up 00:04:00 Section 09: Graph Databases Introduction to Graph Databases 00:05:00 Modelling in Graph 00:14:00 Modelling Movielens as a Graph 00:10:00 Neo4J 00:04:00 Neo4J installation 00:08:00 Cypher 00:12:00 Cypher II 00:19:00 Movielens in Neo4J: Data Import 00:17:00 Movielens in Neo4J: Spring Application 00:12:00 Data Analysis in Graph Databases 00:05:00 Examples of Graph Algorithms in Neo4J 00:18:00 Graph Databases: Wrap Up 00:07:00 Section 10: Hadoop Platform Introduction to Big Data With Apache 
Hadoop 00:06:00 Big Data Storage in Hadoop (HDFS) 00:16:00 Big Data Processing : YARN 00:11:00 Installation 00:13:00 Data Processing in Hadoop (MapReduce) 00:14:00 Examples in MapReduce 00:25:00 Data Processing in Hadoop (Pig) 00:12:00 Examples in Pig 00:21:00 Data Processing in Hadoop (Spark) 00:23:00 Examples in Spark 00:23:00 Data Analytics with Apache Spark 00:09:00 Data Compression 00:06:00 Data serialization and storage formats 00:20:00 Hadoop: Wrap Up 00:07:00 Section 11: Big Data SQL Engines Introduction Big Data SQL Engines 00:03:00 Apache Hive 00:10:00 Apache Hive : Demonstration 00:20:00 MPP SQL-on-Hadoop: Introduction 00:03:00 Impala 00:06:00 Impala : Demonstration 00:18:00 PrestoDB 00:13:00 PrestoDB : Demonstration 00:14:00 SQL-on-Hadoop: Wrap Up 00:02:00 Section 12: Distributed Commit Log Data Architectures 00:05:00 Introduction to Distributed Commit Logs 00:07:00 Apache Kafka 00:03:00 Confluent Platform Installation 00:10:00 Data Modeling in Kafka I 00:13:00 Data Modeling in Kafka II 00:15:00 Data Generation for Testing 00:09:00 Use case: Toll fee Collection 00:04:00 Stream processing 00:11:00 Stream Processing II with Stream + Connect APIs 00:19:00 Example: Kafka Streams 00:15:00 KSQL : Streaming Processing in SQL 00:04:00 KSQL: Example 00:14:00 Demonstration: NYC Taxi and Fares 00:01:00 Streaming: Wrap Up 00:02:00 Section 13: Summary Database Polyglot 00:04:00 Extending your knowledge 00:08:00 Data Visualization 00:11:00 Building a Data-driven Organization - Conclusion 00:07:00 Conclusion 00:03:00 Resources Resources - SQL NoSQL Big Data And Hadoop 00:00:00

Microsoft Azure Data Lake Storage Service (Gen1 and Gen2)

By Packt

A hands-on course to learn about different tools and scenarios to ingest data into Data Lake. This course includes end-to-end demonstrations to ingest, process, and export data using Databricks and HDInsight, which will help you have a good understanding of Azure Data Lake Services.

Overview This comprehensive course on SQL NoSQL Big Data and Hadoop will deepen your understanding of this topic. After successful completion of this course you can acquire the required skills in this sector. This SQL NoSQL Big Data and Hadoop course comes with accredited certification from CPD, which will enhance your CV and make you more competitive in the job market. So enrol in this course today to fast-track your career. How will I get my certificate? At the end of the course there will be an online written test, which you can take either during or after the course. After successfully completing the test you will be able to order your certificate, which is included in the price. Who is This course for? There is no experience or previous qualifications required for enrolment on this SQL NoSQL Big Data and Hadoop course. It is available to all students, of all academic backgrounds. Requirements Our SQL NoSQL Big Data and Hadoop course is fully compatible with PCs, Macs, laptops, tablets and smartphones. This course has been designed to be fully compatible with tablets and smartphones so you can access your course on Wi-Fi, 3G or 4G. There is no time limit for completing this course; it can be studied in your own time at your own pace. Career Path Learning this new skill will help you to advance in your career. It will diversify your job options and help you develop new techniques to keep up with the fast-changing world. This skillset will help you to: open doors to opportunities, increase your adaptability, stay relevant, boost your confidence, and much more! 
Course Curriculum 14 sections • 130 lectures • 22:34:00 total length •Introduction: 00:07:00 •Building a Data-driven Organization - Introduction: 00:04:00 •Data Engineering: 00:06:00 •Learning Environment & Course Material: 00:04:00 •Movielens Dataset: 00:03:00 •Introduction to Relational Databases: 00:09:00 •SQL: 00:05:00 •Movielens Relational Model: 00:15:00 •Movielens Relational Model: Normalization vs Denormalization: 00:16:00 •MySQL: 00:05:00 •Movielens in MySQL: Database import: 00:06:00 •OLTP in RDBMS: CRUD Applications: 00:17:00 •Indexes: 00:16:00 •Data Warehousing: 00:15:00 •Analytical Processing: 00:17:00 •Transaction Logs: 00:06:00 •Relational Databases - Wrap Up: 00:03:00 •Distributed Databases: 00:07:00 •CAP Theorem: 00:10:00 •BASE: 00:07:00 •Other Classifications: 00:07:00 •Introduction to KV Stores: 00:02:00 •Redis: 00:04:00 •Install Redis: 00:07:00 •Time Complexity of Algorithm: 00:05:00 •Data Structures in Redis : Key & String: 00:20:00 •Data Structures in Redis II : Hash & List: 00:18:00 •Data structures in Redis III : Set & Sorted Set: 00:21:00 •Data structures in Redis IV : Geo & HyperLogLog: 00:11:00 •Data structures in Redis V : Pubsub & Transaction: 00:08:00 •Modelling Movielens in Redis: 00:11:00 •Redis Example in Application: 00:29:00 •KV Stores: Wrap Up: 00:02:00 •Introduction to Document-Oriented Databases: 00:05:00 •MongoDB: 00:04:00 •MongoDB Installation: 00:02:00 •Movielens in MongoDB: 00:13:00 •Movielens in MongoDB: Normalization vs Denormalization: 00:11:00 •Movielens in MongoDB: Implementation: 00:10:00 •CRUD Operations in MongoDB: 00:13:00 •Indexes: 00:16:00 •MongoDB Aggregation Query - MapReduce function: 00:09:00 •MongoDB Aggregation Query - Aggregation Framework: 00:16:00 •Demo: MySQL vs MongoDB. 
Modeling with Spark: 00:02:00 •Document Stores: Wrap Up: 00:03:00 •Introduction to Search Engine Stores: 00:05:00 •Elasticsearch: 00:09:00 •Basic Terms Concepts and Description: 00:13:00 •Movielens in Elasticsearch: 00:12:00 •CRUD in Elasticsearch: 00:15:00 •Search Queries in Elasticsearch: 00:23:00 •Aggregation Queries in Elasticsearch: 00:23:00 •The Elastic Stack (ELK): 00:12:00 •Use case: UFO Sighting in Elasticsearch: 00:29:00 •Search Engines: Wrap Up: 00:04:00 •Introduction to Columnar databases: 00:06:00 •HBase: 00:07:00 •HBase Architecture: 00:09:00 •HBase Installation: 00:09:00 •Apache Zookeeper: 00:06:00 •Movielens Data in HBase: 00:17:00 •Performing CRUD in HBase: 00:24:00 •SQL on HBase - Apache Phoenix: 00:14:00 •SQL on HBase - Apache Phoenix - Movielens: 00:10:00 •Demo : GeoLife GPS Trajectories: 00:02:00 •Wide Column Store: Wrap Up: 00:05:00 •Introduction to Time Series: 00:09:00 •InfluxDB: 00:03:00 •InfluxDB Installation: 00:07:00 •InfluxDB Data Model: 00:07:00 •Data manipulation in InfluxDB: 00:17:00 •TICK Stack I: 00:12:00 •TICK Stack II: 00:23:00 •Time Series Databases: Wrap Up: 00:04:00 •Introduction to Graph Databases: 00:05:00 •Modelling in Graph: 00:14:00 •Modelling Movielens as a Graph: 00:10:00 •Neo4J: 00:04:00 •Neo4J installation: 00:08:00 •Cypher: 00:12:00 •Cypher II: 00:19:00 •Movielens in Neo4J: Data Import: 00:17:00 •Movielens in Neo4J: Spring Application: 00:12:00 •Data Analysis in Graph Databases: 00:05:00 •Examples of Graph Algorithms in Neo4J: 00:18:00 •Graph Databases: Wrap Up: 00:07:00 •Introduction to Big Data With Apache Hadoop: 00:06:00 •Big Data Storage in Hadoop (HDFS): 00:16:00 •Big Data Processing : YARN: 00:11:00 •Installation: 00:13:00 •Data Processing in Hadoop (MapReduce): 00:14:00 •Examples in MapReduce: 00:25:00 •Data Processing in Hadoop (Pig): 00:12:00 •Examples in Pig: 00:21:00 •Data Processing in Hadoop (Spark): 00:23:00 •Examples in Spark: 00:23:00 •Data Analytics with Apache Spark: 00:09:00 •Data Compression: 
00:06:00 •Data serialization and storage formats: 00:20:00 •Hadoop: Wrap Up: 00:07:00 •Introduction Big Data SQL Engines: 00:03:00 •Apache Hive: 00:10:00 •Apache Hive : Demonstration: 00:20:00 •MPP SQL-on-Hadoop: Introduction: 00:03:00 •Impala: 00:06:00 •Impala : Demonstration: 00:18:00 •PrestoDB: 00:13:00 •PrestoDB : Demonstration: 00:14:00 •SQL-on-Hadoop: Wrap Up: 00:02:00 •Data Architectures: 00:05:00 •Introduction to Distributed Commit Logs: 00:07:00 •Apache Kafka: 00:03:00 •Confluent Platform Installation: 00:10:00 •Data Modeling in Kafka I: 00:13:00 •Data Modeling in Kafka II: 00:15:00 •Data Generation for Testing: 00:09:00 •Use case: Toll fee Collection: 00:04:00 •Stream processing: 00:11:00 •Stream Processing II with Stream + Connect APIs: 00:19:00 •Example: Kafka Streams: 00:15:00 •KSQL : Streaming Processing in SQL: 00:04:00 •KSQL: Example: 00:14:00 •Demonstration: NYC Taxi and Fares: 00:01:00 •Streaming: Wrap Up: 00:02:00 •Database Polyglot: 00:04:00 •Extending your knowledge: 00:08:00 •Data Visualization: 00:11:00 •Building a Data-driven Organization - Conclusion: 00:07:00 •Conclusion: 00:03:00 •Assignment -SQL NoSQL Big Data and Hadoop: 00:00:00

Building Data Analytics Solutions Using Amazon Redshift

By Nexus Human

Duration 1 Day 6 CPD hours This course is intended for data warehouse engineers, data platform engineers, and architects and operators who build and manage data analytics pipelines. Attendees should have completed either AWS Technical Essentials or Architecting on AWS, and Building Data Lakes on AWS. Overview In this course, you will learn to: Compare the features and benefits of data warehouses, data lakes, and modern data architectures Design and implement a data warehouse analytics solution Identify and apply appropriate techniques, including compression, to optimize data storage Select and deploy appropriate options to ingest, transform, and store data Choose the appropriate instance and node types, clusters, auto scaling, and network topology for a particular business use case Understand how data storage and processing affect the analysis and visualization mechanisms needed to gain actionable business insights Secure data at rest and in transit Monitor analytics workloads to identify and remediate problems Apply cost management best practices In this course, you will build a data analytics solution using Amazon Redshift, a cloud data warehouse service. The course focuses on the data collection, ingestion, cataloging, storage, and processing components of the analytics pipeline. You will learn to integrate Amazon Redshift with a data lake to support both analytics and machine learning workloads. You will also learn to apply security, performance, and cost management best practices to the operation of Amazon Redshift. Module A: Overview of Data Analytics and the Data Pipeline Data analytics use cases Using the data pipeline for analytics Module 1: Using Amazon Redshift in the Data Analytics Pipeline Why Amazon Redshift for data warehousing? 
Overview of Amazon Redshift Module 2: Introduction to Amazon Redshift Amazon Redshift architecture Interactive Demo 1: Touring the Amazon Redshift console Amazon Redshift features Practice Lab 1: Load and query data in an Amazon Redshift cluster Module 3: Ingestion and Storage Ingestion Interactive Demo 2: Connecting your Amazon Redshift cluster using a Jupyter notebook with Data API Data distribution and storage Interactive Demo 3: Analyzing semi-structured data using the SUPER data type Querying data in Amazon Redshift Practice Lab 2: Data analytics using Amazon Redshift Spectrum Module 4: Processing and Optimizing Data Data transformation Advanced querying Practice Lab 3: Data transformation and querying in Amazon Redshift Resource management Interactive Demo 4: Applying mixed workload management on Amazon Redshift Automation and optimization Interactive demo 5: Amazon Redshift cluster resizing from the dc2.large to ra3.xlplus cluster Module 5: Security and Monitoring of Amazon Redshift Clusters Securing the Amazon Redshift cluster Monitoring and troubleshooting Amazon Redshift clusters Module 6: Designing Data Warehouse Analytics Solutions Data warehouse use case review Activity: Designing a data warehouse analytics workflow Module B: Developing Modern Data Architectures on AWS Modern data architectures

Building Batch Data Analytics Solutions on AWS

By Nexus Human

Duration 1 Day 6 CPD hours This course is intended for: data platform engineers; architects and operators who build and manage data analytics pipelines. Overview In this course, you will learn to: Compare the features and benefits of data warehouses, data lakes, and modern data architectures Design and implement a batch data analytics solution Identify and apply appropriate techniques, including compression, to optimize data storage Select and deploy appropriate options to ingest, transform, and store data Choose the appropriate instance and node types, clusters, auto scaling, and network topology for a particular business use case Understand how data storage and processing affect the analysis and visualization mechanisms needed to gain actionable business insights Secure data at rest and in transit Monitor analytics workloads to identify and remediate problems Apply cost management best practices In this course, you will learn to build batch data analytics solutions using Amazon EMR, an enterprise-grade Apache Spark and Apache Hadoop managed service. You will learn how Amazon EMR integrates with open-source projects such as Apache Hive, Hue, and HBase, and with AWS services such as AWS Glue and AWS Lake Formation. The course addresses data collection, ingestion, cataloging, storage, and processing components in the context of Spark and Hadoop. You will learn to use EMR Notebooks to support both analytics and machine learning workloads. You will also learn to apply security, performance, and cost management best practices to the operation of Amazon EMR. 
Module A: Overview of Data Analytics and the Data Pipeline Data analytics use cases Using the data pipeline for analytics Module 1: Introduction to Amazon EMR Using Amazon EMR in analytics solutions Amazon EMR cluster architecture Interactive Demo 1: Launching an Amazon EMR cluster Cost management strategies Module 2: Data Analytics Pipeline Using Amazon EMR: Ingestion and Storage Storage optimization with Amazon EMR Data ingestion techniques Module 3: High-Performance Batch Data Analytics Using Apache Spark on Amazon EMR Apache Spark on Amazon EMR use cases Why Apache Spark on Amazon EMR Spark concepts Interactive Demo 2: Connect to an EMR cluster and perform Scala commands using the Spark shell Transformation, processing, and analytics Using notebooks with Amazon EMR Practice Lab 1: Low-latency data analytics using Apache Spark on Amazon EMR Module 4: Processing and Analyzing Batch Data with Amazon EMR and Apache Hive Using Amazon EMR with Hive to process batch data Transformation, processing, and analytics Practice Lab 2: Batch data processing using Amazon EMR with Hive Introduction to Apache HBase on Amazon EMR Module 5: Serverless Data Processing Serverless data processing, transformation, and analytics Using AWS Glue with Amazon EMR workloads Practice Lab 3: Orchestrate data processing in Spark using AWS Step Functions Module 6: Security and Monitoring of Amazon EMR Clusters Securing EMR clusters Interactive Demo 3: Client-side encryption with EMRFS Monitoring and troubleshooting Amazon EMR clusters Demo: Reviewing Apache Spark cluster history Module 7: Designing Batch Data Analytics Solutions Batch data analytics use cases Activity: Designing a batch data analytics workflow Module B: Developing Modern Data Architectures on AWS Modern data architectures

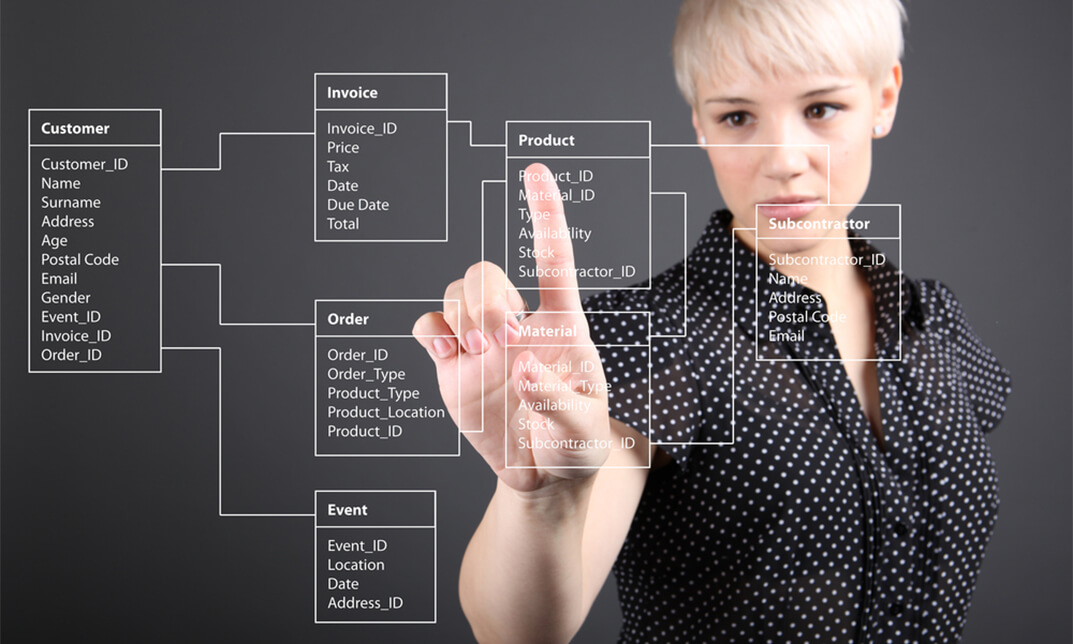

Introduction to Database Design

By iStudy UK

This Introduction to Database Design course teaches the basics of relational database design and explains how to make a good database design and become an expert in it. Designing a database is quite simple, but you have to understand a few rules before jumping into it. It is essential to know these rules; otherwise, you will tend to make errors. If you find it hard to deal with databases, scripts and all the technical parts, then this comprehensive course is just for you. The course includes the following: Creating database and database users Introduction of data, different data types, foreign key constraints, and other relevant concepts used to create the best database Importing database tables Explore all types of relationships Designing all types of relationships within a database. Learn three common forms of database normalisation Application of database for the business purpose And much more... What Will I Learn? What is a database Understand different types of databases Understand normalization Assign relationships Eliminate repetition Relate tables with IDs Design rules Requirements Basic Microsoft Windows training or equivalent experience Who is the target audience? Students just getting started with designing databases and those who have been designing databases but looking for tips on more effective design Introduction Introduction FREE 00:03:00 Database Basics Overview 00:01:00 What is a Database? 00:03:00 Different Types of Databases 00:12:00 The Process of Database Design 00:08:00 Normalizing Overview - Normalizing 00:01:00 What is Normalization? 00:02:00 Basic Steps to Normalization 00:05:00 A. Brainstorm 00:01:00 B. Organize 00:03:00 C. Eliminate Repetition 00:12:00 D. Assign Relationships 00:01:00 D1. One to One Relationship 00:06:00 D2. One to Many Relationship 00:04:00 D3. 
Many to Many Relationship 00:06:00 Relating Tables with IDs 00:02:00 Examples of Bad Design 00:15:00 Examples of Good Design 00:06:00 Design Rules 00:05:00 Conclusion Conclusion 00:04:00 Course Certification
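
As a rough illustration of the "Relating Tables with IDs" and relationship topics listed above, the sketch below builds a tiny schema with one one-to-many and one many-to-many relationship. It is not taken from the course: the table names (`customer`, `purchase`, `product`, `purchase_item`), the sample rows, and the use of Python's built-in `sqlite3` module are all assumptions made for the example.

```python
import sqlite3

# Hypothetical schema for illustration only; names are not from the course.
SCHEMA = """
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
-- One to many: each purchase row points at exactly one customer by ID.
CREATE TABLE purchase (
    purchase_id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(customer_id)
);
CREATE TABLE product (
    product_id INTEGER PRIMARY KEY,
    title      TEXT NOT NULL
);
-- Many to many: a junction table holds one row per (purchase, product) pair.
CREATE TABLE purchase_item (
    purchase_id INTEGER NOT NULL REFERENCES purchase(purchase_id),
    product_id  INTEGER NOT NULL REFERENCES product(product_id),
    PRIMARY KEY (purchase_id, product_id)
);
"""


def build_demo_db():
    """Create an in-memory database and load a few sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
    conn.executescript(SCHEMA)
    conn.execute("INSERT INTO customer VALUES (1, 'Ada')")
    conn.executemany("INSERT INTO product VALUES (?, ?)",
                     [(1, "Keyboard"), (2, "Mouse")])
    conn.executemany("INSERT INTO purchase VALUES (?, ?)", [(10, 1), (11, 1)])
    conn.executemany("INSERT INTO purchase_item VALUES (?, ?)",
                     [(10, 1), (10, 2), (11, 2)])
    conn.commit()
    return conn


def products_bought_by(conn, customer_name):
    """Follow the IDs from customer to product and return distinct titles."""
    rows = conn.execute(
        """
        SELECT DISTINCT product.title
        FROM customer
        JOIN purchase      ON purchase.customer_id = customer.customer_id
        JOIN purchase_item ON purchase_item.purchase_id = purchase.purchase_id
        JOIN product       ON product.product_id = purchase_item.product_id
        WHERE customer.name = ?
        ORDER BY product.title
        """,
        (customer_name,),
    ).fetchall()
    return [title for (title,) in rows]
```

Each fact is stored once (a customer name, a product title), and the relationships are carried only by ID columns, which is the repetition-eliminating idea the normalization lessons refer to.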

Working with Apache Kafka (for Developers) (TTDS6760)

By Nexus Human

Duration 2 Days 12 CPD hours This course is intended for This is an introductory-and-beyond level course geared for experienced Java developers seeking to be proficient in Apache Kafka. Attendees should be experienced developers who are comfortable with Java, and have reasonable experience working with databases. Overview Working in a hands-on learning environment, students will explore Overview of Streaming technologies Kafka concepts and architecture Programming using Kafka API Kafka Streams Monitoring Kafka Tuning / Troubleshooting Kafka Apache Kafka is a real-time data pipeline processor. Its high scalability, fault tolerance, execution speed, and fluid integrations are some of the key hallmarks that make it an integral part of many Enterprise Data architectures. In this lab-intensive two-day course, students will learn how to use Kafka to build streaming solutions. Introduction to Streaming Systems Fast data Streaming architecture Lambda architecture Message queues Streaming processors Introduction to Kafka Architecture Comparing Kafka with other queue systems (JMS / MQ) Kafka concepts: Messages, Topics, Partitions, Brokers, Producers, commit logs Kafka & Zookeeper Producing messages Consuming messages (Consumers, Consumer Groups) Message retention Scaling Kafka Programming With Kafka Configuration parameters Producer API (Sending messages to Kafka) Consumer API (consuming messages from Kafka) Commits, Offsets, Seeking Schema with Avro Kafka Streams Streams overview and architecture Streams use cases and comparison with other platforms Learning Kafka Streaming concepts (KStream, KTable, KStore) KStreaming operations (transformations, filters, joins, aggregations) Administering Kafka Hardware / Software requirements Deploying Kafka Configuration of brokers / topics / partitions / producers / consumers Security: How to secure a Kafka cluster and secure client communications (SASL, Kerberos) Monitoring: monitoring tools Capacity Planning: estimating usage and 
demand Troubleshooting: failure scenarios and recovery Monitoring and Instrumenting Kafka Monitoring Kafka Instrumenting with Metrics library Instrument Kafka applications and monitor their performance
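
The concepts named in this outline (topics, partitions, commit logs, consumer groups, offsets, commits) can be sketched with a small in-memory model. This is a toy, not the Kafka client API and not course material: the class names `ToyTopic` and `ToyConsumerGroup` are hypothetical, and `zlib.crc32` stands in for Kafka's real key-hashing partitioner purely as an assumption for the example.

```python
import zlib
from collections import defaultdict


class ToyTopic:
    """A topic modelled as a fixed number of append-only partition logs."""

    def __init__(self, num_partitions):
        self.partitions = [[] for _ in range(num_partitions)]

    def produce(self, key, value):
        # Records with the same key always hash to the same partition,
        # so their relative order is preserved within that partition.
        partition = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        log = self.partitions[partition]
        log.append((key, value))
        # The offset is simply the record's position in the partition log.
        return partition, len(log) - 1


class ToyConsumerGroup:
    """Tracks, per partition, the next offset the group should read."""

    def __init__(self, topic):
        self.topic = topic
        self.committed = defaultdict(int)  # partition -> next offset

    def poll(self, partition, max_records=10):
        # Reading does not remove records; it only slices the log
        # starting from the last committed position.
        start = self.committed[partition]
        return self.topic.partitions[partition][start:start + max_records]

    def commit(self, partition, count):
        # Committing advances the group's position past processed records.
        self.committed[partition] += count
```

Because polling leaves the log untouched, a second `ToyConsumerGroup` on the same topic would start from offset 0 and see every record again, which mirrors how independent consumer groups each keep their own offsets.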

MySql Masterclass

By IOMH - Institute of Mental Health

Overview of MySql Masterclass The digital world has changed how businesses work in the UK, and database management is now a key part of every successful company. MySQL is one of the most popular tools for this job, used by over 40% of websites around the world. In the UK, big names like BBC, Sky, and many tech startups in London’s Silicon Roundabout rely on MySQL. The MySql Masterclass is designed to help people learn the skills needed to build a strong career in this growing field. Database experts in the UK earn an average salary of £45,000 each year, making it a smart career choice. The MySql Masterclass has 41 helpful modules that start with the basics and move up to more advanced topics. Students will learn how to create databases, write MySQL commands, use joins, and manage stored procedures. The course also teaches how to make systems run faster and safer with performance and security tools. Other important topics include handling JSON data, using full-text search, and working with replication. These are all things that modern companies look for in a database specialist. This MySql Masterclass is made for beginners and gives them the knowledge they need to handle real business databases. It helps students build strong skills in design, optimisation, and administration. The UK’s tech industry is worth £150 billion a year, and the MySql Masterclass helps learners get ready for job opportunities in many areas like retail, health, and finance. Learning Outcomes By the end of the MySql Masterclass, learners will be able to: Build and manage MySQL databases from the ground up Use SELECT, INSERT, UPDATE, and DELETE commands with confidence Work with advanced joins, unions, and stored procedures Design and use indexes, views, and full-text search for better performance Set up MySQL replication, backups, and basic system administration Handle JSON data and manage time zone settings within databases Who is this course for? 
Aspiring Database Administrators who want full MySQL training to manage databases, user access, and backups in organisations needing strong data systems. Software Developers who want to improve their backend development by learning MySQL queries, stored procedures, and database performance skills. Data Analysts who need advanced SQL to pull useful data from complex tables using joins, group functions, and reporting tools. IT Professionals looking to build database knowledge, including how to configure, tune, and fix issues in MySQL systems. Career Changers with little or no tech background who want to start in database roles, learning from the basics to advanced MySQL tasks. Process of Evaluation After studying the MySql Masterclass Course, your skills and knowledge will be tested with an MCQ exam or assignment. You have to get a score of 60% to pass the test and get your certificate. Certificate of Achievement Certificate of Completion - Digital / PDF Certificate After completing the MySql Masterclass Course, you can order your CPD Accredited Digital / PDF Certificate for £5.99. (Each) Certificate of Completion - Hard copy Certificate You can get the CPD Accredited Hard Copy Certificate for £12.99. (Each) Shipping Charges: Inside the UK: £3.99 International: £10.99 Requirements You don’t need any educational qualification or experience to enrol in the MySql Masterclass course. 
Career Path Completing this MySql Masterclass course could lead to rewarding jobs like: Database Administrator – £35K to £65K per year MySQL Developer – £30K to £55K per year Data Analyst – £25K to £45K per year Backend Developer – £35K to £60K per year Database Consultant – £40K to £70K per year Course Curriculum: MySql Masterclass Module 1: Introduction on MySQL 01:00:00 Module 2: Data Types 00:51:00 Module 3: SELECT Statements 00:59:00 Module 4: Backticks 00:15:00 Module 5: NULL 00:18:00 Module 6: Limit and Offset 00:13:00 Module 7: Creating databases 00:18:00 Module 8: Using Variables 00:25:00 Module 9: Comment MySQL 00:14:00 Module 10: INSERT Statements 00:29:00 Module 11: DELETE Statements 00:21:00 Module 12: UPDATE Statements 00:20:00 Module 13: ORDER BY Clause 00:08:00 Module 14: Group By 00:18:00 Module 15: Errors in MySQL 00:10:00 Module 16: Joins 00:37:00 Module 17: Joins continued 00:11:00 Module 18: UNION 00:18:00 Module 19: Arithmetic 00:20:00 Module 20: String operations 00:33:00 Module 21: Date and Time Operations 00:08:00 Module 22: Handling Time Zones 00:07:00 Module 23: Regular Expressions 00:19:00 Module 24: VIEWS 00:20:00 Module 25: Table Creation 00:23:00 Module 26: ALTER TABLE 00:23:00 Module 27: Drop Table 00:05:00 Module 28: MySQL LOCK TABLE 00:10:00 Module 29: Error codes 00:08:00 Module 30: Stored routines (procedures and functions) 00:29:00 Module 31: Indexes and Keys 00:24:00 Module 32: Full-Text search 00:18:00 Module 33: PREPARE Statements 00:09:00 Module 34: JSON 00:11:00 Module 35: Extract values from JSON type 00:05:00 Module 36: MySQL Admin 00:08:00 Module 37: TRIGGERS 00:12:00 Module 38: Configuration and tuning 00:07:00 Module 39: Events 00:08:00 Module 40: ENUM 00:09:00 Module 41: Collations, Transactions, Log files, Replication, Backup 00:41:00
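
For a feel of the statements covered in the early modules (INSERT, UPDATE, DELETE, SELECT with GROUP BY, LIMIT and OFFSET), here is a minimal sketch. It runs against Python's built-in `sqlite3` module rather than a MySQL server, which is an assumption made so the example is self-contained; the `staff` table and its rows are invented for illustration, and MySQL client libraries typically use `%s` placeholders instead of `?`.

```python
import sqlite3


def run_crud_demo():
    """Run basic create/read/update/delete statements on a sample table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE staff ("
        "id INTEGER PRIMARY KEY, name TEXT NOT NULL, dept TEXT NOT NULL)"
    )

    # INSERT: add four rows using parameter placeholders.
    conn.executemany(
        "INSERT INTO staff (name, dept) VALUES (?, ?)",
        [("Asha", "Finance"), ("Ben", "Retail"),
         ("Chloe", "Retail"), ("Dev", "Health")],
    )

    # UPDATE: move one person to a different department.
    conn.execute("UPDATE staff SET dept = ? WHERE name = ?", ("Finance", "Ben"))

    # DELETE: remove one row.
    conn.execute("DELETE FROM staff WHERE name = ?", ("Dev",))

    # SELECT with GROUP BY: head count per department.
    per_dept = conn.execute(
        "SELECT dept, COUNT(*) FROM staff GROUP BY dept ORDER BY dept"
    ).fetchall()

    # SELECT with LIMIT and OFFSET: skip the first name, return the next two.
    page = conn.execute(
        "SELECT name FROM staff ORDER BY name LIMIT 2 OFFSET 1"
    ).fetchall()

    conn.close()
    return per_dept, [name for (name,) in page]
```

Calling `run_crud_demo()` returns the per-department counts and one page of names, so the effect of each statement can be checked by comparing the result with the rows inserted at the top.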