595 Courses

Deep Learning Projects - Convolutional Neural Network Course

By One Education

Ever wondered how machines recognise faces, detect traffic signs, or tag photos with uncanny accuracy? This course dives straight into Convolutional Neural Networks (CNNs), the engine behind image recognition and many deep learning breakthroughs. With a clear focus on project-based learning, you'll explore how CNNs work, how they're built, and how they're trained to see and interpret the world digitally. The content flows logically and stays rooted in clarity, making even the most complex architectures feel almost polite.

This is not just a sequence of slides and jargon. It's a well-structured course tailored for learners who want to confidently grasp how deep learning models behave and evolve. Whether you're brushing up on your neural network knowledge or aiming to reinforce your AI expertise, the course serves up algorithms, code walkthroughs and layered insights with a tone that's informative, direct, and occasionally dry-witted. If you fancy turning raw data into pixel-level predictions using nothing but code, logic, and neural layers, you're exactly where you need to be.

Learning Outcomes:

- Gain a solid understanding of convolutional neural networks and their applications in deep learning.
- Learn how to install the necessary packages and set up a dataset structure for deep learning projects.
- Discover how to create your own convolutional neural network model and layers using Python.
- Understand how to preprocess and augment data for advanced image recognition tasks.
- Learn how to evaluate the accuracy of your models and understand the different models available for deep learning projects.

The Deep Learning Projects - Convolutional Neural Network course is designed to provide you with the skills and knowledge you need to build your own advanced deep learning projects. Using Python, you'll learn how to install the necessary packages, set up a dataset structure, and create your own convolutional neural network model and layers. You'll also learn how to preprocess and augment data to enhance the accuracy of your models, and how to evaluate their performance using data generators.

Course Curriculum:

- Section 01: Introduction
- Section 02: Installations
- Section 03: Getting Started
- Section 04: Accuracy

How is the course assessed?

Upon completing an online module, you will immediately be given access to a specifically crafted MCQ test. The pass mark for each test is 60%.

Exam & Retakes: The initial exam for this online course is provided at no additional cost. If you need a retake, a fee of £9.99 applies.

Certification: Upon successful completion of the assessment, learners can obtain their certification by placing an order and paying the fee: £9 for the PDF Certificate and £15 for the Hardcopy Certificate within the UK (an additional £10 postal charge applies for international delivery).

CPD: 10 CPD hours / points. Accredited by CPD Quality Standards.

Who is this course for?

- Data analysts who want to expand their skills in deep learning and convolutional neural networks.
- Programmers who want to learn how to build advanced models for image recognition.
- Entrepreneurs who want to develop their own deep learning-based applications for image recognition.
- Students who want to enhance their skills in deep learning and prepare for a career in the field.
- Anyone who wants to explore the world of convolutional neural networks and deep learning projects.

Career path:

- Data Analyst: £24,000 - £45,000
- Machine Learning Engineer: £28,000 - £65,000
- Computer Vision Engineer: £30,000 - £70,000
- Technical Lead: £40,000 - £90,000
- Chief Technology Officer: £90,000 - £250,000

Certificates:

- Certificate of completion, digital certificate: £9. You can apply for a CPD Accredited PDF Certificate at a cost of £9.
- Certificate of completion, hard copy certificate: £15. A hard copy can be sent to you by post at a cost of £15.

IT Skills for Business Level 3

Admission Gifts: FREE PDF & Hard Copy Certificate | PDF Transcripts | FREE Student ID | Assessment | Lifetime Access | Enrolment Letter

IT skills are no longer merely beneficial; they have become indispensable in any business environment. With over 1.46 million people working within the digi-tech sector in the UK, the demand for advanced IT competencies continues to surge. The IT Skills for Business Level 3 course bundle is designed to place you at the forefront of this dynamic field. So, get ready to elevate your professional capability and become an invaluable asset to any business!

The IT Skills for Business Level 3 course offers an extensive curriculum tailored to enhance your technical skills across a broad spectrum of IT disciplines. From foundational courses like IT Support Technician and Functional Skills IT to specialised training in Ethical Hacking, Cyber Security, and Network Security, this bundle equips you with the tools to secure, analyse, and manage IT infrastructure effectively. You can also sharpen your strategic acumen with Business Analysis, learning to translate business needs into IT solutions, and explore Financial Analysis for richer business decision-making.

Courses included in this IT Skills for Business Level 3 Career Bundle:

- Course 01: IT Support Technician
- Course 02: Functional Skills IT
- Course 03: Building Your Own Computer Course
- Course 04: IT: Ethical Hacking, IT Security and IT Cyber Attacking
- Course 05: Computer Operating System and Troubleshooting
- Course 06: Cyber Security Incident Handling and Incident Response
- Course 07: Cyber Security Law
- Course 08: Network Security and Risk Management
- Course 09: CompTIA Network
- Course 10: CompTIA Cloud+ (CV0-002)
- Course 11: Web Application Penetration Testing Course
- Course 12: Learn Ethical Hacking From A-Z: Beginner To Expert
- Course 13: C# (C-Sharp)
- Course 14: JavaScript Fundamentals
- Course 15: Python Programming Bible
- Course 16: Data Protection (GDPR) Practitioner
- Course 17: Microsoft SQL Server Development for Everyone!
- Course 18: SQL Database Administrator
- Course 19: Data Science and Visualisation with Machine Learning
- Course 20: SQL For Data Analytics & Database Development
- Course 21: Introduction to Business Analysis
- Course 22: Business Data Analysis
- Course 23: Financial Analysis for Finance Reports
- Course 24: Financial Modelling Using Excel
- Course 25: Data analytics with Excel
- Course 26: Excel Data Tools and Data Management
- Course 27: Ultimate Microsoft Excel For Business Bootcamp
- Course 28: MS Word Essentials - The Complete Word Course - Level 3
- Course 29: Document Control
- Course 30: Information Management

Don't miss the opportunity to transform your career with cutting-edge IT skills that are crucial in today's tech-driven world. Use the industry-relevant IT knowledge this IT Skills for Business Level 3 course bundle has to offer as your gateway to success. Enrol today and take the first step towards securing a prominent role in the booming tech industry!

Learning Outcomes of this Bundle:

- Master key IT support skills and build your own computer.
- Gain proficiency in programming with C#, JavaScript, and Python.
- Develop expertise in cyber security, network security, and risk management.
- Learn comprehensive data protection practices including GDPR compliance.
- Enhance skills in SQL for effective database management and business analytics.
- Apply advanced Excel techniques for financial modelling and data analysis.

But that's not all. When you enrol in the IT Skills for Business Level 3 Bundle, you'll receive 30 CPD-Accredited PDF Certificates, Hard Copy Certificates, and our exclusive student ID card, all absolutely free.

Why Prefer this Course?

- Get a Free CPD Accredited Certificate upon completion of the course
- Get a Free Student ID Card with this training program (£10 postal charge will be applicable for international delivery)
- The course is Affordable and Simple to understand
- Get Lifetime Access to the course materials
- The training program comes with 24/7 Tutor Support

Start your learning journey straight away!

The "IT Skills for Business Level 3" course bundle is an invaluable resource for anyone looking to deepen their understanding and expertise in the diverse fields of IT and Business Analysis. This course offers learners the chance to master foundational IT Support Skills, such as Building their Own Computers and troubleshooting various software issues, providing a solid base from which to expand their knowledge into more specialised areas.

Further advancement is facilitated through detailed modules focusing on Business Analysis Skills, teaching learners how to translate complex business needs into scalable IT solutions. This integration of IT proficiency with Business knowledge ensures that participants are well-prepared to tackle strategic challenges, making them invaluable assets to any organisation. By blending IT skills with an understanding of business processes and Data Analysis, this course sets up its participants for success in multiple pathways, from Network Management to Business Consulting.

Moreover, this diploma offers learners the opportunity to acquire a Recognised Qualification that is highly valued in the field of IT / Business. With this Certification, graduates are better positioned to pursue career advancement and higher responsibilities within the IT / Business setting. The skills and knowledge gained from this course will enable learners to make meaningful contributions to IT / Business related fields, supporting their experience and long-term development.

Course Curriculum

Course 01: IT Support Technician

- Module 01: Software
- Module 02: Hardware
- Module 03: Security
- Module 04: Networking
- Module 05: Basic IT Literacy

Course 02: Functional Skills IT

- Module 01: How People Use Computers
- Module 02: System Hardware
- Module 03: Device Ports And Peripherals
- Module 04: Data Storage And Sharing
- Module 05: Understanding Operating Systems
- Module 06: Setting Up And Configuring A PC
- Module 07: Setting Up And Configuring A Mobile Device
- Module 08: Managing Files
- Module 09: Using And Managing Application Software
- Module 10: Configuring Network And Internet Connectivity
- Module 11: IT Security Threat Mitigation
- Module 12: Computer Maintenance And Management
- Module 13: IT Troubleshooting
- Module 14: Understanding Databases
- Module 15: Developing And Implementing Software

Course 03: Building Your Own Computer Course

- Module 01: Introduction to Computer & Building PC
- Module 02: Overview of Hardware and Parts
- Module 03: Building the Computer
- Module 04: Input and Output Devices
- Module 05: Software Installation
- Module 06: Computer Networking
- Module 07: Building a Gaming PC
- Module 08: Maintenance of Computers

And 27 more courses.

How will I get my Certificate?

After successfully completing the course, you will be able to order your Certificates as proof of your achievement.

- PDF Certificate: Free (previously £12.99 × 30 ≈ £390)
- CPD Hard Copy Certificate: Free (for the first course; previously £29.99)

CPD: 300 CPD hours / points. Accredited by CPD Quality Standards.

Who is this course for?

Anyone interested in learning more about the topic is advised to take this bundle. This bundle is ideal for:

- Aspiring IT professionals.
- Business analysts.
- Data scientists.
- System administrators.
- Network security specialists.
- Database managers.

Requirements: You will not need any prior background or expertise to enrol in this course.

Career path

After completing this bundle, you will be ready to start your career or begin its next phase.

- IT Support Specialist: $35,000 - $60,000
- Cyber Security Analyst: $60,000 - $100,000
- Network Engineer: $50,000 - $90,000
- Data Analyst: $45,000 - $85,000
- Software Developer: $50,000 - $120,000
- Database Administrator: $60,000 - $110,000

Certificates:

- CPD Accredited Digital certificate (digital certificate, included): the CPD Accredited e-Certificate, Enrolment Letter, and Student ID Card are free.
- CPD Accredited Hard copy certificate (hard copy certificate, included): international students pay an additional £10 per certificate as an international delivery charge.

JavaScript Application Programming - CPD Certified

Admission Gifts: FREE PDF & Hard Copy Certificate | PDF Transcripts | FREE Student ID | Assessment | Lifetime Access | Enrolment Letter

To date, JavaScript remains the backbone of interactive web applications worldwide, with over 95% of all websites using it in some form. In the rapidly evolving digital landscape, mastering JavaScript is more than a skill; it's a necessity. The JavaScript Application Programming bundle is designed to transform you from a novice to a master developer, and elevate your programming skills to set the web on fire.

The JavaScript Application Programming bundle provides a comprehensive exploration of JavaScript and its powerful ecosystem. Starting with JavaScript Foundations for Everyone, the course progressively covers advanced topics such as JavaScript Functions and JavaScript Promises, ensuring a deep understanding of core concepts. Additional modules like jQuery, WebGL 3D Programming, and Web GIS Application Development with ASP.NET CORE MVC expand your skillset into creating dynamic, data-driven web applications, alongside courses in other essential languages such as Python, C#, and SQL.

Courses included in this JavaScript Application Programming - CPD Certified Bundle:

- Course 01: JavaScript Foundations for Everyone
- Course 02: JavaScript Functions
- Course 03: JavaScript Promises
- Course 04: jQuery: JavaScript and AJAX Coding Bible
- Course 05: Java Certification Cryptography Architecture
- Course 06: Master JavaScript with Data Visualisation
- Course 07: Coding Essentials - Javascript, ASP.Net, C# - Bonus HTML
- Course 08: Kotlin Programming: Android Coding Bible
- Course 09: Secure Programming of Web Applications
- Course 10: Web GIS Application Development with C# ASP.NET CORE MVC and Leaflet
- Course 11: Mastering SQL Programming
- Course 12: Complete Microsoft SQL Server from Scratch: Bootcamp
- Course 13: Ultimate PHP & MySQL Web Development & OOP Coding
- Course 14: SQL for Data Science, Data Analytics and Data Visualisation
- Course 15: Quick Data Science Approach from Scratch
- Course 16: Python Programming Bible
- Course 17: Python Programming from Scratch with My SQL Database
- Course 18: Machine Learning with Python Course
- Course 19: HTML Web Development Crash Course
- Course 20: CSS Web Development
- Course 21: Three.js & WebGL 3D Programming
- Course 22: Basics of WordPress: Create Unlimited Websites
- Course 23: Masterclass Bootstrap 5 Course - Responsive Web Design
- Course 24: C++ Development: The Complete Coding Guide
- Course 25: C# Basics
- Course 26: C# Programming - Beginner to Advanced
- Course 27: Stripe with C#
- Course 28: C# Console and Windows Forms Development with LINQ & ADO.NET
- Course 29: Cyber Security Incident Handling and Incident Response
- Course 30: Computer Networks Security from Scratch to Advanced

Don't miss the chance to become a versatile and highly skilled software developer with our JavaScript Application Programming course. Whether you aim to start your own tech venture, work for a top tech company, or freelance as a developer, this course will provide you with the skills needed to succeed. Enrol today to take the first step towards a lucrative career in software development, where your ability to innovate and solve complex problems will shape the future of technology!

Learning Outcomes of this Bundle:

- Master fundamental and advanced JavaScript programming techniques.
- Learn to implement interactive web features using jQuery and AJAX.
- Develop proficiency in data visualisation with JavaScript.
- Understand and apply Python, C#, and SQL in web development contexts.
- Create responsive and secure web applications using Bootstrap and C#.
- Manage and deploy sophisticated web-based GIS applications.

With this JavaScript Application Programming - CPD Certified course, you will get 30 CPD Accredited PDF Certificates, a Hard Copy Certificate and our exclusive student ID card absolutely free.

Why Prefer this Course?

- Get a Free CPD Accredited Certificate upon completion of the course
- Get a Free Student ID Card with this training program (£10 postal charge will be applicable for international delivery)
- The course is Affordable and Simple to understand
- Get Lifetime Access to the course materials
- The training program comes with 24/7 Tutor Support

Start your learning journey straight away!

The JavaScript Application Programming course bundle is an exceptional resource for those looking to dive deep into the world of modern web development. By mastering fundamental and advanced JavaScript programming techniques, learners will gain the ability to create dynamic and interactive web applications that are both efficient and visually appealing. This foundational knowledge is essential, as JavaScript remains a critical tool for front-end development, enabling developers to implement complex features that improve user experience and site functionality.

Beyond the basics, the course introduces students to data visualisation with JavaScript, which is an increasingly important skill in the tech industry. Additionally, the inclusion of tools like jQuery and Bootstrap ensures that learners can streamline their coding process and design responsive layouts that adapt to different devices, a must-have in today's mobile-first world. Learners will also develop proficiency in other programming languages such as Python, C#, and SQL, which are integral to backend development and database management.

Moreover, this diploma offers learners the opportunity to acquire a Recognised Qualification that is highly valued in the field of JavaScript. With this Certification, graduates are better positioned to pursue career advancement and higher responsibilities in JavaScript roles. The skills and knowledge gained from this course will enable learners to make meaningful contributions to JavaScript-related fields, supporting their experience and long-term development.

Course Curriculum

Course 01: JavaScript Foundations for Everyone

- Module 01: About the Author
- Module 02: Introduction to JavaScript
- Module 03: Strengths and Weaknesses of JavaScript
- Module 04: Writing JavaScript in Chrome
- Module 05: JavaScript Variables
- Module 06: Demo of JavaScript Variables
- Module 07: Basic Types of JavaScript
- Module 08: JavaScript Boolean
- Module 09: JavaScript Strings
- Module 10: JavaScript Numbers
- Module 11: JavaScript Objects
- Module 12: Demo of JavaScript Objects
- Module 13: JavaScript Arrays
- Module 14: Demo of JavaScript Arrays
- Module 15: JavaScript Functions
- Module 16: Demo of JavaScript Functions
- Module 17: JavaScript Scope and Hoisting
- Module 18: Demo of JavaScript Scope and Hoisting
- Module 19: Currying Functions
- Module 20: Demo of Currying Functions
- Module 21: Timeouts and Callbacks
- Module 22: Demo of Timeouts and Callbacks
- Module 23: JavaScript Promises
- Module 24: Demo of JavaScript Promises
- Module 25: Demo of Javascript Async
- Module 26: Flow Control
- Module 27: Demo of Flow Control
- Module 28: JavaScript For Loop
- Module 29: Demo of JavaScript For Loop
- Module 30: Demo of Switch Statements
- Module 31: Error Handling
- Module 32: Demo Project

Course 02: JavaScript Functions

- Module 01: Introduction
- Module 02: Defining And Invoking Functions
- Module 03: Function Scope
- Module 04: Composing Functions
- Module 05: Asynchronous Functions

Course 03: JavaScript Promises

- Module 01: JavaScript Promises
- Module 02: Understanding Promises
- Module 03: Using Promises
- Module 04: Multiple Promises
- Module 05: Handling Errors With Promises

And 27 more courses.

How will I get my Certificate?

After successfully completing the course, you will be able to order your Certificates as proof of your achievement.

- PDF Certificate: Free (previously £12.99 × 30 ≈ £390)
- CPD Hard Copy Certificate: Free (for the first course; previously £29.99)

CPD: 300 CPD hours / points. Accredited by CPD Quality Standards.

Who is this course for?

Anyone interested in learning more about the topic is advised to take this bundle. This bundle is ideal for:

- Aspiring software developers.
- Web developers.
- Computer science students.
- Tech industry professionals.
- Entrepreneurs in tech.
- Career changers.

Requirements: You will not need any prior background or expertise to enrol in this course.

Career path

After completing this bundle, you will be ready to start your career or begin its next phase.

- Web Developer: $40,000 - $85,000
- Software Developer: $50,000 - $120,000
- Full-Stack Developer: $60,000 - $120,000
- Data Analyst: $45,000 - $85,000
- Systems Engineer: $60,000 - $130,000
- Cyber Security Analyst: $60,000 - $100,000

Certificates:

- CPD Accredited Digital certificate (digital certificate, included): the CPD Accredited e-Certificate, Enrolment Letter, and Student ID Card are free.
- CPD Accredited Hard Copy Certificate (hard copy certificate, included): international students pay an additional £10 as a shipment fee.

Deep Learning - Computer Vision for Beginners Using PyTorch

By Packt

In this course, you will learn PyTorch, a widely used deep learning framework, along with the basics of convolutional neural networks in PyTorch. We will also cover the basics of Python and how to work with different Python libraries.

Organisations of every size, from tiny businesses to major corporations, need people skilled in SQL. In light of this, our online training course has been developed to help you succeed by equipping you with all the necessary skills. Mastering SQL matters even more if you're looking for your first job in the data industry. You will learn about topics such as SQL fundamentals, data wrangling, SQL analysis, AB testing, distributed computing with Apache Spark, Delta Lake, and more through four increasingly challenging SQL projects with data science applications.

These subjects will equip you with the skills necessary to use SQL creatively for data analysis and exploration, write queries quickly, produce datasets for data analysis, conduct feature engineering, integrate SQL with other data analysis and machine learning toolsets, and work with unstructured data. This Specialisation is designed for a learner with little or no prior coding expertise who wants to become proficient with SQL queries. Experts with years of experience have meticulously planned the curriculum for the SQL Skills Training course. As a result, you will find it simple to learn the course material.

Learning outcome

After finishing the course, you'll:

- Learn to utilise the tools for view creation
- Become familiar with updating columns and indexed views
- Be able to test and debug
- Be able to search a database using SQL
- Become more familiar with inline table-valued functions
- Learn the fundamentals of transactions and multiple statements

Why Prefer US?

- Opportunity to earn a certificate accredited by CPD after completing this course
- Student ID card with amazing discounts, completely for FREE! (£10 postal charges will be applicable for international delivery)
- Standards-aligned lesson planning
- Innovative and engaging content and activities
- Assessments that measure higher-level thinking and skills
- Complete the program in your own time, at your own pace
- Each of our students gets full 24/7 tutor support

Course Curriculum: SQL Programming Course

- Module 01: Course Introduction. Introduction, Course Curriculum Overview, Overview of Databases
- Module 02: SQL Environment Setup. MySQL Installation, MySQL Workbench Installation, Connecting to MySQL using Console
- Module 03: SQL Statement Basics. Overview of Challenges, SQL Statement Basic, SELECT Statement, SELECT DISTINCT, Column AS Statement, COUNT built-in Method usage, SELECT WHERE Clause - Part One, SELECT WHERE Clause - Part Two, Statement Basic, Limit Clause Statement, Using BETWEEN with Same Column Data, How to Apply IN Operator, Wildcard Characters with LIKE and ILIKE
- Module 04: GROUP BY Statements. Overview of GROUP BY, Aggregation function SUM(), Aggregation MIN() and MAX(), GROUP BY - One, GROUP BY - Two, HAVING Clause
- Module 05: JOINS. Overview of JOINS, Introduction to JOINS, AS Statement table, INNER Joins, FULL Outer Join, LEFT Outer JOIN, RIGHT JOIN, Union
- Module 06: Advanced SQL Commands / Statements. Timestamps, EXTRACT from timestamp, Mathematical Functions, String Functions, SUBQUERY
- Module 07: Creating Database and Tables. Basic of Database and Tables, Data Types, Primary key and Foreign key, Create Table in SQL Script, Insert, Update, Delete, Alter Table, Drop-Table, NOT NULL Constraint, UNIQUE Constraint
- Module 08: Databases and Tables. Creating a Database backup, Overview of Databases and Tables, Restoring a Database

CPD: 10 CPD hours / points. Accredited by CPD Quality Standards.

Who is this course for?

The course can be helpful for anyone working in SQL-related fields, whether self-employed or employed, regardless of career level.

Requirements: You will not need any prior background or expertise to enrol in this course.

Career path

Careers built on SQL skills move quickly but pay well and can be highly satisfying. This is your opportunity to learn more and start changing things.

- Query Language Developer
- Server Database Manager
- Python Developer
- Technical Consultant
- Project Implementation Manager
- Software Developer (SQL)

Certificates:

- Certificate of completion, digital certificate: £10

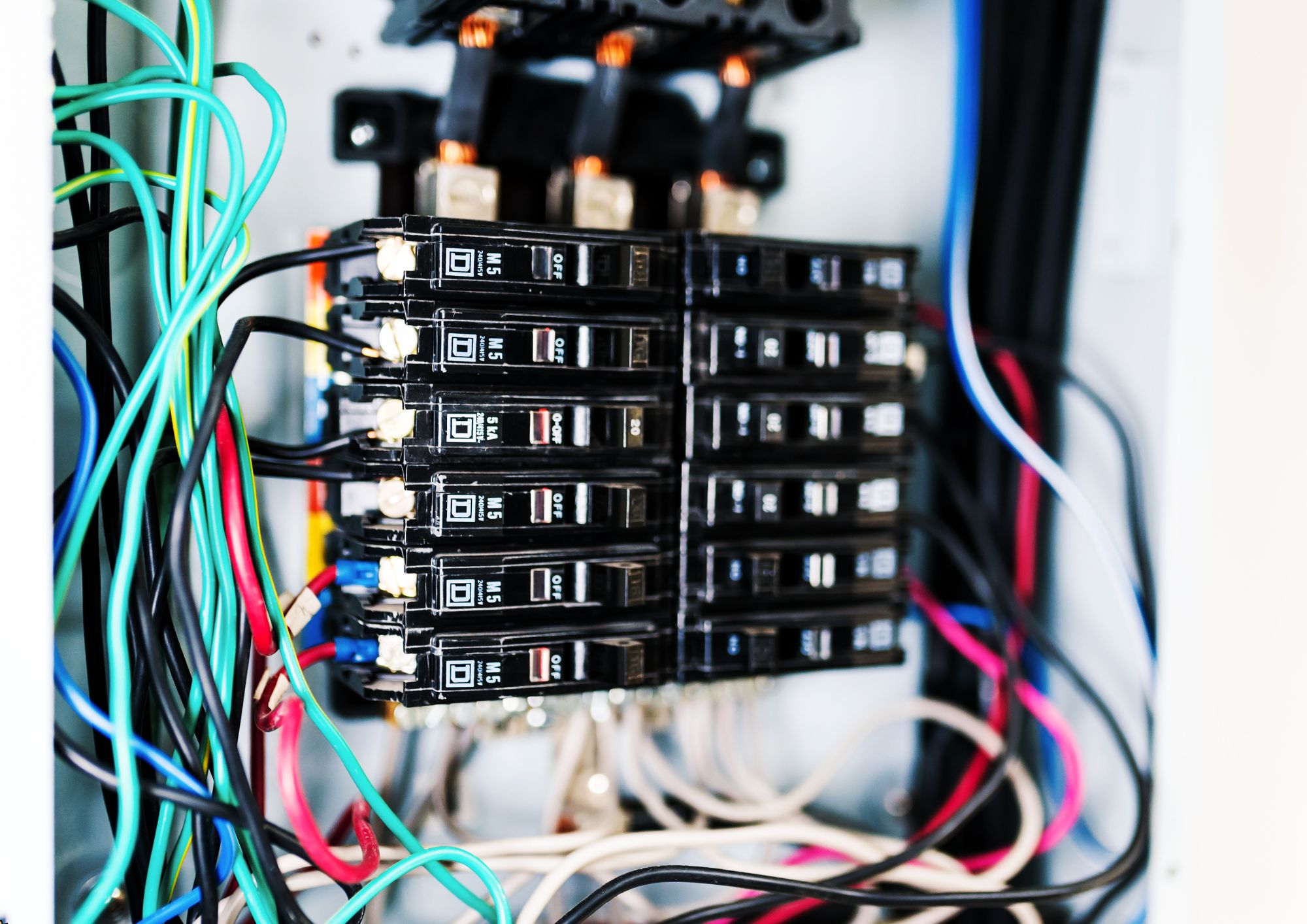

Digital Electrical Circuits and Intelligence Electrical Devices

By Compete High

Mastering the Future: Digital Electrical Circuits and Intelligence Electrical Devices

Course Overview: Welcome to a transformative journey into the cutting-edge realm of 'Digital Electrical Circuits and Intelligence Electrical Devices.' In today's rapidly evolving technological landscape, the demand for skilled professionals who can navigate the complexities of digital circuits and intelligent devices is greater than ever. This comprehensive course is designed to equip you with the knowledge and skills needed not only to understand but also to master the intricacies of digital electrical circuits and harness the power of intelligent electrical devices.

Key Features:

- Comprehensive Curriculum: Our course covers a wide spectrum of topics, from the fundamentals of digital electrical circuits to the advanced principles behind intelligent electrical devices. You'll delve into topics such as digital signal processing, microcontrollers, embedded systems, and more.
- Hands-On Learning: Theory comes to life through hands-on projects and practical exercises. You'll have the opportunity to apply your knowledge in real-world scenarios, ensuring that you not only understand the concepts but can also implement them effectively.
- Cutting-Edge Technologies: Stay ahead of the curve by learning about the latest advancements in digital circuits and intelligent devices. Explore the world of artificial intelligence, machine learning, and the Internet of Things (IoT) to understand how they intersect with electrical engineering.
- Expert Instruction: Benefit from the guidance of experienced instructors who bring a wealth of industry knowledge to the classroom. Our instructors are dedicated to your success, providing support and insights throughout the course.
- Industry-Relevant Projects: Gain practical experience by working on projects that mirror real-world challenges faced by professionals in the field. Build a portfolio that showcases your abilities and sets you apart in a competitive job market.
- Networking Opportunities: Connect with like-minded individuals and industry experts through networking events, forums, and collaborative projects. Expand your professional network and open doors to new opportunities.
- Flexible Learning: Designed to accommodate various schedules, our course offers flexibility through online lectures and resources. Access course materials at your own pace, allowing you to balance your learning with other commitments.
- Certification: Upon successful completion of the course, receive a recognized certification that validates your expertise in digital electrical circuits and intelligent electrical devices, enhancing your credibility in the job market.

Whether you're a seasoned professional looking to upskill or a student aspiring to enter the field of electrical engineering, our 'Digital Electrical Circuits and Intelligence Electrical Devices' course provides the knowledge and practical experience needed to thrive in the dynamic world of technology. Enroll now to embark on a journey towards mastering the future of electrical engineering!

Course Curriculum:

- Introduction To Digital Electric Circuits
- Numbering Systems
- Binary Arithmetic
- Logic Gates
- Flip-Flops
- Counters Shift Registers
- Adders

Build Structures in Portuguese Course

By One Education

Ever wondered how to speak confidently about buildings, materials, and construction terms in Portuguese? This course is your blueprint to building language skills tailored to the world of structural design and architecture. From concrete columns to roofing terms, you'll learn how to talk structures with precision and clarity, all in Portuguese. Whether you're a construction enthusiast, a professional working with Portuguese-speaking clients, or simply keen to expand your vocabulary, this course is structured to help you build fluency without ever picking up a hammer.

Expect engaging modules that introduce you to the foundations of structural language, from everyday construction phrases to technical expressions. It's not about laying bricks; it's about laying down words that matter. Delivered entirely online, this course offers you the flexibility to learn from wherever you are, while gaining knowledge that's both specific and linguistically sharp. If structure speaks to you, let it speak Portuguese too.

Learning Outcomes:

- Gain a solid understanding of artificial neural networks and their applications in deep learning.
- Learn how to install the necessary packages and preprocess data for neural network training.
- Discover how to encode data and build your own artificial neural network using Python.
- Understand the steps involved in making predictions using your neural network model.
- Learn how to deal with imbalanced data in your neural network training.

The Project on Deep Learning - Artificial Neural Network course is designed to provide you with the skills and knowledge you need to build your own neural network and perform complex tasks using deep learning. You'll learn how to install the necessary packages, preprocess data, and encode data for neural network training. You'll also gain a deeper understanding of artificial neural networks and learn how to build your own model using Python. By the end of the course, you'll be able to make predictions using your neural network model and understand how to deal with imbalanced data in your training.

Build Structures in Portuguese Course Curriculum:

- Introduction
- Section 01: Chapter 1
- Section 02: Chapter 2
- Section 03: Chapter 3
- Section 04: Chapter 4
- Section 05: Chapter 5
- Section 06: Chapter 6
- Section 07: Chapter 7
- Section 08: Chapter 8
- Section 09: Chapter 9
- Section 10: Chapter 10

How is the course assessed?

Upon completing an online module, you will immediately be given access to a specifically crafted MCQ test. The pass mark for each test is 60%.

Exam & Retakes: The initial exam for this online course is provided at no additional cost. If you need a retake, a fee of £9.99 applies.

Certification: Upon successful completion of the assessment, learners can obtain their certification by placing an order and paying the fee: £9 for the PDF Certificate and £15 for the Hardcopy Certificate within the UK (an additional £10 postal charge applies for international delivery).

CPD: 10 CPD hours / points. Accredited by CPD Quality Standards.

Who is this course for?

- Data analysts who want to expand their skills in deep learning and artificial neural networks.
- Programmers who want to learn how to build their own neural network models for advanced tasks.
- Entrepreneurs who want to develop their own deep learning-based applications.
- Students who want to enhance their skills in deep learning and prepare for a career in the field.
- Anyone who wants to explore the world of artificial neural networks and deep learning projects.

Career path:

- Data Analyst: £24,000 - £45,000
- Machine Learning Engineer: £28,000 - £65,000
- Deep Learning Engineer: £30,000 - £75,000
- Technical Lead: £40,000 - £90,000
- Chief Technology Officer: £90,000 - £250,000

Certificates:

- Certificate of completion, digital certificate: £9. You can apply for a CPD Accredited PDF Certificate at a cost of £9.
- Certificate of completion, hard copy certificate: £15. A hard copy can be sent to you by post at a cost of £15.

Apache Spark 3 Advance Skills for Cracking Job Interviews

By Packt

A carefully structured advanced-level course on Apache Spark 3 to help you clear your job interviews. This course covers advanced topics and concepts that are part of the Databricks Spark certification exam. Boost your skills in Spark 3 architecture and memory management.

Mechanical Engineering, Mechatronics Engineering & Energy Engineer - 30 Courses Bundle

By NextGen Learning

Get ready for an exceptional online learning experience with the Mechanical Engineering, Mechatronics Engineering & Energy Engineer bundle! This carefully curated collection of 30 premium courses is designed to cater to a variety of interests and disciplines. Dive into a sea of knowledge and skills, tailoring your learning journey to suit your unique aspirations. The Mechanical And Mechatronics Engineering & Energy Engineer is a dynamic package, blending the expertise of industry professionals with the flexibility of digital learning. It offers the perfect balance of foundational understanding and advanced insights. Whether you're looking to break into a new field or deepen your existing knowledge, the Mechanical Engineering, Mechatronics Engineering & Energy Engineer package has something for everyone. As part of the Mechanical And Mechatronics Engineering & Energy Engineer package, you will receive complimentary PDF certificates for all courses in this bundle at no extra cost. Equip yourself with the Mechanical And Mechatronics Engineering & Energy Engineer bundle to confidently navigate your career path or personal development journey. Enrol today and start your career growth! 
This Bundle Comprises the Following Mechanical Engineering, Mechatronics Engineering & Energy Engineer CPD Accredited Courses:
- Course 01: Mechanical Engineering
- Course 02: Engineering Mechanics Course for Beginners
- Course 03: Crack Your Mechanical Engineer Interview
- Course 04: Automotive Engineering: Onboard Diagnostics
- Course 05: Automotive Design
- Course 06: Hybrid Vehicle Expert Training
- Course 07: Engine Lubrication Systems Online Course
- Course 08: Rotating Machines
- Course 09: Electric Circuits for Electrical Engineering
- Course 10: Electrical Machines for Electrical Engineering
- Course 11: Electronic & Electrical Devices Maintenance & Troubleshooting
- Course 12: Digital Electric Circuits & Intelligent Electrical Devices
- Course 13: MATLAB Simulink for Electrical Power Engineering
- Course 14: Power Electronics for Electrical Engineering
- Course 15: Energy Engineer Course
- Course 16: Energy Saving in Electric Motors
- Course 17: Electric Vehicle Battery Management System
- Course 18: A complete course on Turbocharging
- Course 19: Heating Ventilation and AirConditioning (HVAC) Technician
- Course 20: Python Intermediate Training
- Course 21: Spatial Data Visualisation and Machine Learning in Python
- Course 22: Data Center Training Essentials: Mechanical & Cooling
- Course 23: Career Development Plan Fundamentals
- Course 24: CV Writing and Job Searching
- Course 25: Learn to Level Up Your Leadership
- Course 26: Networking Skills for Personal Success
- Course 27: Ace Your Presentations: Public Speaking Masterclass
- Course 28: Learn to Make a Fresh Start in Your Life
- Course 29: Motivation - Motivating Yourself & Others
- Course 30: Excel: Top 50 Microsoft Excel Formulas in 50 Minutes!
What will make you stand out?
Upon completion of this online Mechanical Engineering, Mechatronics Engineering & Energy Engineer bundle, you will gain the following:
- CPD QS Accredited Proficiency with this Mechanical And Mechatronics Engineering & Energy Engineer bundle.
- After successfully completing the Mechanical Engineering, Mechatronics Engineering & Energy Engineer bundle, you will receive FREE CPD PDF Certificates as evidence of your newly acquired abilities.
- Lifetime access to the whole collection of learning materials of this Mechanical And Mechatronics Engineering & Energy Engineer bundle.
- The online test with immediate results.
- You can study and complete the Mechanical And Mechatronics Engineering & Energy Engineer bundle at your own pace.
- Study for the Mechanical And Mechatronics Engineering & Energy Engineer bundle using any internet-connected device, such as a computer, tablet, or mobile device.
Each course in this Mechanical Engineering, Mechatronics Engineering & Energy Engineer bundle holds a prestigious CPD accreditation, symbolising exceptional quality. The materials, brimming with knowledge, are regularly updated, ensuring their relevance. This bundle promises not just education but an evolving learning experience. Engage with this extraordinary collection, and prepare to enrich your personal and professional development. Embrace the future of learning with the Mechanical And Mechatronics Engineering & Energy Engineer, a rich anthology of 30 diverse courses. Each course in the Mechanical Engineering, Mechatronics Engineering & Energy Engineer bundle is handpicked by our experts to ensure a wide spectrum of learning opportunities. This Mechanical And Mechatronics Engineering & Energy Engineer bundle will take you on a unique and enriching educational journey. The bundle encapsulates our mission to provide quality, accessible education for all.
Whether you are just starting your career, looking to switch industries, or hoping to enhance your professional skill set, the Mechanical And Mechatronics Engineering & Energy Engineer bundle offers you the flexibility and convenience to learn at your own pace. Make the Mechanical And Mechatronics Engineering & Energy Engineer package your trusted companion in your lifelong learning journey.
CPD: 300 CPD hours / points, accredited by CPD Quality Standards.
Who is this course for? The Mechanical Engineering, Mechatronics Engineering & Energy Engineer bundle is perfect for:
- Lifelong learners looking to expand their knowledge and skills.
- Professionals seeking to enhance their career with CPD certification.
- Individuals wanting to explore new fields and disciplines.
- Anyone who values flexible, self-paced learning from the comfort of home.
Requirements: You are cordially invited to enrol in this bundle; please note that there are no formal prerequisites or qualifications required. We've designed this curriculum to be accessible to all, irrespective of prior experience or educational background.
Career path: Unleash your potential with the Mechanical Engineering, Mechatronics Engineering & Energy Engineer bundle. Acquire versatile skills across multiple fields, foster problem-solving abilities, and stay ahead of industry trends. Ideal for those seeking career advancement, a new professional path, or personal growth. Embrace the journey with this bundle package.
Certificates: CPD Quality Standard Certificate. Digital certificate - Included. 30 CPD Accredited Digital Certificates and A Hard Copy Certificate.

Cloud Infrastructure Engineer with CompTIA & Cyber Security- 30 CPD Certified Courses!

By NextGen Learning

Get ready for an exceptional online learning experience with the Cloud Infrastructure Engineer with CompTIA & Cyber Security bundle! This carefully curated collection of 30 premium courses is designed to cater to a variety of interests and disciplines. Dive into a sea of knowledge and skills, tailoring your learning journey to suit your unique aspirations. The Cloud Infrastructure Engineer with CompTIA & Cyber Security is a dynamic package, blending the expertise of industry professionals with the flexibility of digital learning. It offers the perfect balance of foundational understanding and advanced insights. Whether you're looking to break into a new field or deepen your existing knowledge, the Cloud Infrastructure Engineer with CompTIA & Cyber Security package has something for everyone. As part of the Cloud Infrastructure Engineer with CompTIA & Cyber Security package, you will receive complimentary PDF certificates for all courses in this bundle at no extra cost. Equip yourself with the Cloud Infrastructure Engineer with CompTIA & Cyber Security bundle to confidently navigate your career path or personal development journey. Enrol today and start your career growth!
This Bundle Comprises the Following Cloud Infrastructure Engineer with CompTIA & Cyber Security CPD Accredited Courses:
- Course 01: Cloud Computing / CompTIA Cloud+ (CV0-002)
- Course 02: Exam Prep: AWS Certified Solutions Architect Associate 2021
- Course 03: Data Center Training Essentials: General Introduction
- Course 04: Data Center Training Essentials: Mechanical & Cooling
- Course 05: Internet of Things
- Course 06: Web Application Penetration Testing Course
- Course 07: Google Cloud for Beginners
- Course 08: MySQL Database Development Mastery
- Course 09: Microsoft Azure Cloud Concepts
- Course 10: Azure Machine Learning
- Course 11: Cyber Security Incident Handling and Incident Response
- Course 12: IT Administration and Networking
- Course 13: CompTIA Network+ Certification (N10-007)
- Course 14: CompTIA CySA+ Cybersecurity Analyst (CS0-002)
- Course 15: Learn Ethical Hacking From A-Z: Beginner To Expert
- Course 16: CompTIA IT Fundamentals ITF+ (FCO-U61)
- Course 17: CISRM - Certified Information Systems Risk Manager
- Course 18: Quick Data Science Approach from Scratch
- Course 19: Project on Deep Learning - Artificial Neural Network
- Course 20: Deep Learning Neural Network with R
- Course 21: Cyber Security Awareness Training
- Course 22: CompTIA A+ (220-1001)
- Course 23: Computer Networks Security from Scratch to Advanced
- Course 24: Career Development Plan Fundamentals
- Course 25: CV Writing and Job Searching
- Course 26: Learn to Level Up Your Leadership
- Course 27: Networking Skills for Personal Success
- Course 28: Excel: Top 50 Microsoft Excel Formulas in 50 Minutes!
- Course 29: Decision Making and Critical Thinking
- Course 30: Time Management Training - Online Course
What will make you stand out?
Upon completion of this online Cloud Infrastructure Engineer with CompTIA & Cyber Security bundle, you will gain the following:
- CPD QS Accredited Proficiency with this Cloud Infrastructure Engineer with CompTIA & Cyber Security bundle.
- After successfully completing the Cloud Infrastructure Engineer with CompTIA & Cyber Security bundle, you will receive FREE CPD PDF Certificates as evidence of your newly acquired abilities.
- Lifetime access to the whole collection of learning materials of this Cloud Infrastructure Engineer with CompTIA & Cyber Security bundle.
- The online test with immediate results.
- You can study and complete the Cloud Infrastructure Engineer with CompTIA & Cyber Security bundle at your own pace.
- Study for the Cloud Infrastructure Engineer with CompTIA & Cyber Security bundle using any internet-connected device, such as a computer, tablet, or mobile device.
Each course in this Cloud Infrastructure Engineer with CompTIA & Cyber Security bundle holds a prestigious CPD accreditation, symbolising exceptional quality. The materials, brimming with knowledge, are regularly updated, ensuring their relevance. This bundle promises not just education but an evolving learning experience. Engage with this extraordinary collection, and prepare to enrich your personal and professional development. Embrace the future of learning with the Cloud Infrastructure Engineer with CompTIA & Cyber Security, a rich anthology of 30 diverse courses. Each course in the Cloud Infrastructure Engineer with CompTIA & Cyber Security bundle is handpicked by our experts to ensure a wide spectrum of learning opportunities. This Cloud Infrastructure Engineer with CompTIA & Cyber Security bundle will take you on a unique and enriching educational journey. The bundle encapsulates our mission to provide quality, accessible education for all.
Whether you are just starting your career, looking to switch industries, or hoping to enhance your professional skill set, the Cloud Infrastructure Engineer with CompTIA & Cyber Security bundle offers you the flexibility and convenience to learn at your own pace. Make the Cloud Infrastructure Engineer with CompTIA & Cyber Security package your trusted companion in your lifelong learning journey.
CPD: 300 CPD hours / points, accredited by CPD Quality Standards.
Who is this course for? The Cloud Infrastructure Engineer with CompTIA & Cyber Security bundle is perfect for:
- Lifelong learners looking to expand their knowledge and skills.
- Professionals seeking to enhance their career with CPD certification.
- Individuals wanting to explore new fields and disciplines.
- Anyone who values flexible, self-paced learning from the comfort of home.
Requirements: You are cordially invited to enrol in this bundle; please note that there are no formal prerequisites or qualifications required. We've designed this curriculum to be accessible to all, irrespective of prior experience or educational background.
Career path: Unleash your potential with the Cloud Infrastructure Engineer with CompTIA & Cyber Security bundle. Acquire versatile skills across multiple fields, foster problem-solving abilities, and stay ahead of industry trends. Ideal for those seeking career advancement, a new professional path, or personal growth. Embrace the journey with the CompTIA & Cyber Security bundle package.
Certificates: CPD Quality Standard Certificate. Digital certificate - Included. 30 CPD Quality Standard Certificates - Free.
