
54 Courses delivered Live Online

FinTech and Big Data Analytics

By NextGen Learning

Course Overview: The "FinTech and Big Data Analytics" course provides an in-depth exploration of the intersection between financial technology (FinTech) and big data. Learners will gain essential knowledge about the innovative solutions disrupting the financial services industry, such as cryptocurrencies, InsurTech, and RegTech. The course offers insights into the tools, technologies, and trends shaping the future of finance, with a specific focus on how big data analytics is transforming business models and decision-making. By the end of the course, learners will have a comprehensive understanding of FinTech's growth and its applications, enabling them to make informed decisions in this rapidly evolving field.

Course Description: This course explores the core concepts of financial technology and big data, and the impact of FinTech innovations on traditional financial systems. Topics include the rise of cryptocurrencies, regulatory technology (RegTech), the development of insurance technologies (InsurTech), and the use of big data in reshaping business strategies. Learners will explore the key technologies that drive FinTech, such as blockchain, artificial intelligence (AI), and machine learning, and learn how they enable data-driven decision-making in finance. The course prepares learners for the evolving future of FinTech, equipping them with the skills to understand and navigate this transformative landscape.

Course Modules:
- Module 01: Introduction to Financial Technology – FinTech
- Module 02: Exploring Cryptocurrencies
- Module 03: RegTech
- Module 04: Rise of InsurTechs
- Module 05: Big Data Basics: Understanding Big Data
- Module 06: The Future of FinTech

Who is this course for?
- Individuals seeking to understand the financial technology landscape.
- Professionals aiming to advance their careers in the rapidly evolving FinTech sector.
- Beginners with an interest in emerging financial technologies and data analytics.
- Entrepreneurs looking to innovate within the financial services industry.

Career Path:
- Financial Analyst
- FinTech Specialist
- Data Analyst in Financial Services
- Blockchain Developer
- RegTech Consultant
- InsurTech Specialist
- Big Data Analyst in Finance

CompTIA Data+

By Nexus Human

Duration: 5 Days (30 CPD hours)

Overview: Mining data; manipulating data; visualizing and reporting data; applying basic statistical methods; analyzing complex datasets while adhering to governance and quality standards throughout the entire data life cycle.

CompTIA Data+ is an early-career data analytics certification for professionals tasked with developing and promoting data-driven business decision-making. CompTIA Data+ gives you the confidence to bring data analysis to life. As the importance of data analytics grows, more job roles are required to set context and better communicate vital business intelligence. Collecting, analyzing, and reporting on data can drive priorities and lead business decision-making.

1. Identifying Basic Concepts of Data Schemas: Identify Relational and Non-Relational Databases; Understand the Way We Use Tables, Primary Keys, and Normalization
2. Understanding Different Data Systems: Describe Types of Data Processing and Storage Systems; Explain How Data Changes
3. Understanding Types and Characteristics of Data: Understand Types of Data; Break Down the Field Data Types
4. Comparing and Contrasting Different Data Structures, Formats, and Markup Languages: Differentiate between Structured Data and Unstructured Data; Recognize Different File Formats; Understand the Different Code Languages Used for Data
5. Explaining Data Integration and Collection Methods: Understand the Processes of Extracting, Transforming, and Loading Data; Explain API/Web Scraping and Other Collection Methods; Collect and Use Public and Publicly-Available Data; Use and Collect Survey Data
6. Identifying Common Reasons for Cleansing and Profiling Data: Learn to Profile Data; Address Redundant, Duplicated, and Unnecessary Data; Work with Missing Values; Address Invalid Data; Convert Data to Meet Specifications
7. Executing Different Data Manipulation Techniques: Manipulate Field Data and Create Variables; Transpose and Append Data; Query Data
8. Explaining Common Techniques for Data Manipulation and Optimization: Use Functions to Manipulate Data; Use Common Techniques for Query Optimization
9. Applying Descriptive Statistical Methods: Use Measures of Central Tendency; Use Measures of Dispersion; Use Frequency and Percentages
10. Describing Key Analysis Techniques: Get Started with Analysis; Recognize Types of Analysis
11. Understanding the Use of Different Statistical Methods: Understand the Importance of Statistical Tests; Break Down the Hypothesis Test; Understand Tests and Methods to Determine Relationships Between Variables
12. Using the Appropriate Type of Visualization: Use Basic Visuals; Build Advanced Visuals; Build Maps with Geographical Data; Use Visuals to Tell a Story
13. Expressing Business Requirements in a Report Format: Consider Audience Needs When Developing a Report; Describe Data Source Considerations for Reporting; Describe Considerations for Delivering Reports and Dashboards; Develop Reports or Dashboards; Understand Ways to Sort and Filter Data
14. Designing Components for Reports and Dashboards: Design Elements for Reports and Dashboards; Utilize Standard Elements; Creating a Narrative and Other Written Elements; Understand Deployment Considerations
15. Understand Deployment Considerations: Understand How Updates and Timing Affect Reporting; Differentiate Between Types of Reports
16. Summarizing the Importance of Data Governance: Define Data Governance; Understand Access Requirements and Policies; Understand Security Requirements; Understand Entity Relationship Requirements
17. Applying Quality Control to Data: Describe Characteristics, Rules, and Metrics of Data Quality; Identify Reasons to Quality Check Data and Methods of Data Validation
18. Explaining Master Data Management Concepts: Explain the Basics of Master Data Management; Describe Master Data Management Processes

Additional course details: Nexus Human's CompTIA Data Plus (DA0-001) training program is a workshop that presents an invigorating mix of sessions, lessons, and masterclasses meticulously crafted to propel your learning expedition forward. This immersive bootcamp-style experience boasts interactive lectures, hands-on labs, and collaborative hackathons, all strategically designed to fortify fundamental concepts. Guided by seasoned coaches, each session offers priceless insights and practical skills crucial for honing your expertise. Whether you're new to professional skills or a seasoned professional, this comprehensive course ensures you're equipped with the knowledge and prowess necessary for success. While we feel this is the best course for the CompTIA Data Plus (DA0-001) certification and one of our Top 10, we encourage you to read the course outline to make sure it is the right content for you. Additionally, private sessions, closed classes or dedicated events are available both live online and at our training centres in Dublin and London, as well as at your offices anywhere in the UK, Ireland or across EMEA.
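Module 9 covers measures of central tendency, measures of dispersion, and frequencies and percentages. As a minimal sketch, assuming Python's standard-library `statistics` module (not a tool named in the course outline) and a small hypothetical list of order values, these measures can be computed like this:

```python
from collections import Counter
import statistics


def describe(values):
    """Return basic descriptive statistics for a list of numbers."""
    counts = Counter(values)
    n = len(values)
    return {
        # Measures of central tendency
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "mode": statistics.mode(values),
        # Measures of dispersion
        "range": max(values) - min(values),
        "sample_stdev": statistics.stdev(values),
        # Frequency and percentage of each distinct value
        "frequency": dict(counts),
        "percent": {v: 100 * c / n for v, c in counts.items()},
    }


# Hypothetical sample data, for illustration only
orders = [12, 15, 15, 18, 20, 20, 20, 30]
stats = describe(orders)
for key, value in stats.items():
    print(key, value)
```

With an even number of values, the median is the average of the two middle values, here (18 + 20) / 2 = 19.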

Advanced Analytics with Python

By Nexus Human

Duration: 3 Days (18 CPD hours)

Prerequisites: Before taking this course, delegates should already be familiar with basic analytics techniques, comfortable with basic data manipulation tools such as spreadsheets and databases, and familiar with at least one programming language.

Overview: This course teaches delegates who are already familiar with analytics techniques and at least one programming language how to use the programming language effectively for three tasks: data manipulation and preparation, statistical analysis, and advanced analytics (including predictive modelling and segmentation). Mastery of these techniques will allow delegates to add value in their workplace immediately by extracting insight from company data to support better, data-driven decisions.

Outcomes: After completing the course, delegates will be capable of writing production-ready R code to perform advanced analytics tasks, enabling their organisations to make better, data-driven decisions. Becoming a world-class data analytics practitioner requires mastery of the most sophisticated data analytics tools. These programming languages are some of the most powerful and flexible tools in the data analytics toolkit.

- Topic 1: Intro to our chosen language
- Topic 2: Basic programming conventions
- Topic 3: Data structures
- Topic 4: Accessing data
- Topic 5: Descriptive statistics
- Topic 6: Data visualisation
- Topic 7: Statistical analysis
- Topic 8: Advanced data manipulation
- Topic 9: Advanced analytics: predictive modelling
- Topic 10: Advanced analytics: segmentation
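Topic 9 lists predictive modelling. The listing does not say which models are taught, so as a minimal sketch, assuming plain Python and an ordinary least-squares straight-line fit on hypothetical spend/sales data, the simplest predictive model looks like this:

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx            # slope
    a = mean_y - b * mean_x  # intercept: the line passes through the means
    return a, b


def predict(model, x):
    """Predict y for a new x using a fitted (intercept, slope) pair."""
    a, b = model
    return a + b * x


# Hypothetical data: advertising spend vs. sales (illustrative units)
spend = [1.0, 2.0, 3.0, 4.0, 5.0]
sales = [3.1, 4.9, 7.2, 8.8, 11.0]
model = fit_line(spend, sales)
print(model, predict(model, 6.0))
```

Here the fitted slope is 19.7 / 10 = 1.97 and the intercept is 7.0 - 1.97 × 3 = 1.09, so the prediction at a spend of 6.0 is about 12.91.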

Seismic Uncertainty Evaluation

By EnergyEdge - Training for a Sustainable Energy Future

About this training: The Seismic Uncertainty Evaluation (SUE) course was developed from a number of years of work experience in the subsurface domain. A common question closely related to well planning is the quantification and qualification of depth uncertainty and the robust estimation of volumetric ranges; this course addresses these topics.

Training Objectives: Upon completion of this course, participants will be able to:
- Define a structured approach to seismic depth uncertainty analysis
- Construct data analytics on seismic products (well logs, velocities, and seismic)
- Classify advanced vertical ray tomography on FWI models to assure drill-ready depth seismic, faults, surfaces, and logs
- Interpret probabilistic volumetrics, automatic spill point control, and amplitude conformance closures
- De-risk depth uncertainty by providing drilling and completion with a risking scorecard

Target Audience: This course is intended for individuals who need to understand the basic theory and procedures for the assessment, quantification, and qualification of all drill-ready products (seismic, faults, horizons, etc.):
- Geologist
- Geophysicist
- Reservoir engineer
- Drilling engineer

Course Level: Intermediate

Trainer: Your expert course leader is a cross-functional geoscientist and published author with 27 years of international experience in upstream petroleum exploration and production for oil and gas companies in Australia, India, Singapore, Saudi Arabia, and Oman. During his career he actively supported field development, static and dynamic reservoir modelling and well planning, 3D seismic data acquisition with Schlumberger and SVUL, 3D seismic data processing with CGG and interpretation, and Q.I. and field development with Woodside, Applied Geoscience, and Reliance.

POST TRAINING COACHING SUPPORT (OPTIONAL): To further optimise your learning experience from our courses, we also offer individualised 'One to One' coaching support for 2 hours post training. We can help improve your competence in your chosen area of interest, based on your learning needs and available hours. This is a great opportunity to improve your capability and confidence in a particular area of expertise. It will be delivered over a secure video conference call by one of our senior trainers. They will work with you to create a tailor-made coaching program that will help you achieve your goals faster. Request further information about post-training support and applicable fees.
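The Seismic Uncertainty Evaluation objectives include probabilistic volumetrics and the robust estimation of volumetric ranges. As a minimal Monte Carlo sketch, assuming the standard in-place oil relation STOIIP = GRV × NTG × φ × (1 − Sw) / Bo, independent triangular input distributions, and entirely hypothetical input ranges (this is not the course's workflow), P90/P50/P10 volumes can be estimated like this:

```python
import random


def simulate_stoiip(n_trials=20000, seed=42):
    """Monte Carlo estimate of stock-tank oil initially in place (million m3).

    STOIIP = GRV * NTG * porosity * (1 - Sw) / Bo
    All input ranges below are hypothetical placeholders, and the inputs
    are sampled independently (real studies often correlate them).
    """
    rng = random.Random(seed)
    results = []
    for _ in range(n_trials):
        grv = rng.triangular(80.0, 160.0, 110.0)  # gross rock volume, million m3
        ntg = rng.triangular(0.5, 0.9, 0.7)       # net-to-gross ratio
        phi = rng.triangular(0.15, 0.28, 0.22)    # porosity (fraction)
        sw = rng.triangular(0.20, 0.45, 0.30)     # water saturation (fraction)
        bo = rng.triangular(1.1, 1.4, 1.25)       # oil formation volume factor
        results.append(grv * ntg * phi * (1.0 - sw) / bo)
    return sorted(results)


def percentile(sorted_values, q):
    """Simple index-based percentile (q in 0..100) of an already sorted list."""
    last = len(sorted_values) - 1
    k = max(0, min(last, round(q / 100 * last)))
    return sorted_values[k]


volumes = simulate_stoiip()
# Exceedance convention: P90 is the low case (exceeded with 90% probability)
p90 = percentile(volumes, 10)
p50 = percentile(volumes, 50)
p10 = percentile(volumes, 90)
print(f"P90={p90:.1f}  P50={p50:.1f}  P10={p10:.1f} million m3")
```

The fixed seed makes the run repeatable; in practice the input distributions would come from the depth-uncertainty analysis rather than from fixed placeholder ranges.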

SQL for Data Science, Data Analytics and Data Visualization

By NextGen Learning

Course Overview: This course offers a comprehensive introduction to SQL, designed for those looking to enhance their skills in data science, data analytics, and data visualisation. Learners will develop the ability to work with SQL databases, efficiently query and manage data, and apply these techniques for data analysis in both SQL Server and Azure Data Studio. By mastering SQL statements, aggregation, filtering, and advanced commands, learners will be equipped with the technical skills required to manage large datasets and extract meaningful insights. The course provides a solid foundation in data structures, user management, and working with multiple tables, culminating in the ability to perform complex data analysis and visualisation tasks.

Course Description: This course covers a broad range of topics essential for anyone working with data in a professional capacity. From setting up SQL Server to mastering database management tools such as SQL Server Management Studio (SSMS) and Azure Data Studio, the course provides a thorough grounding in SQL. Learners will gain expertise in data structure statements, filtering data, and applying aggregate functions, as well as building complex SQL queries for data analysis. The course also includes instruction on SQL user management, GROUP BY statements, and JOINs for multi-table analysis. Key topics such as SQL constraints, views, stored procedures, and database backup and restore are also explored. The course incorporates the visualisation tools in Azure Data Studio, enabling learners to visualise data effectively. By the end of the course, learners will be proficient in SQL queries, data manipulation, and using Azure for data analysis.

Curriculum:
- Module 01: Getting Started
- Module 02: SQL Server Setting Up
- Module 03: SQL Azure Data Studio
- Module 04: SQL Database Basic SSMS
- Module 05: SQL Statements for DATA
- Module 06: SQL Data Structure Statements
- Module 07: SQL User Management
- Module 08: SQL Statement Basic
- Module 09: Filtering Data Rows
- Module 10: Aggregate Functions
- Module 11: SQL Query Statements
- Module 12: SQL Group By Statement
- Module 13: JOINS for Multiple Table Data Analysis
- Module 14: SQL Constraints
- Module 15: Views
- Module 16: Advanced SQL Commands
- Module 17: SQL Stored Procedures
- Module 18: Azure Data Studio Visualisation
- Module 19: Azure Studio SQL for Data Analysis
- Module 20: Import & Export Data
- Module 21: Backup and Restore Database

Who is this course for?
- Individuals seeking to enhance their data management and analysis skills.
- Professionals aiming to progress in data science, data analytics, or database administration.
- Beginners with an interest in data analysis and SQL databases.
- Anyone looking to gain expertise in SQL for Azure and SQL Server environments.

Career Path:
- Data Analyst
- Data Scientist
- Database Administrator
- SQL Developer
- Business Intelligence Analyst
- Data Visualisation Specialist
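The curriculum covers constraints, filtering, aggregate functions, GROUP BY, and JOINs. The course itself uses SQL Server and Azure Data Studio. As a minimal sketch that runs anywhere, assuming Python's built-in `sqlite3` module with two hypothetical tables (`customers`, `orders`), the same standard SQL clauses fit together like this:

```python
import sqlite3

# In-memory SQLite database used as a stand-in for SQL Server (assumption)
conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Hypothetical tables with PRIMARY KEY, FOREIGN KEY, NOT NULL and CHECK constraints
cur.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    region      TEXT NOT NULL
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    amount      REAL NOT NULL CHECK (amount >= 0)
);
INSERT INTO customers VALUES (1, 'North'), (2, 'South'), (3, 'North');
INSERT INTO orders VALUES
    (10, 1, 120.0), (11, 1, 80.0), (12, 2, 200.0), (13, 3, 50.0);
""")

# JOIN the two tables, filter rows with WHERE, aggregate with GROUP BY,
# then filter whole groups with HAVING
query = """
SELECT c.region,
       COUNT(o.order_id) AS n_orders,
       SUM(o.amount)     AS total_amount
FROM orders AS o
JOIN customers AS c ON c.customer_id = o.customer_id
WHERE o.amount > 0
GROUP BY c.region
HAVING SUM(o.amount) > 100
ORDER BY total_amount DESC;
"""
rows = cur.execute(query).fetchall()
for region, n_orders, total in rows:
    print(region, n_orders, total)
conn.close()
```

WHERE filters individual rows before grouping, while HAVING filters the aggregated groups afterwards; the same query should run essentially unchanged in SQL Server.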

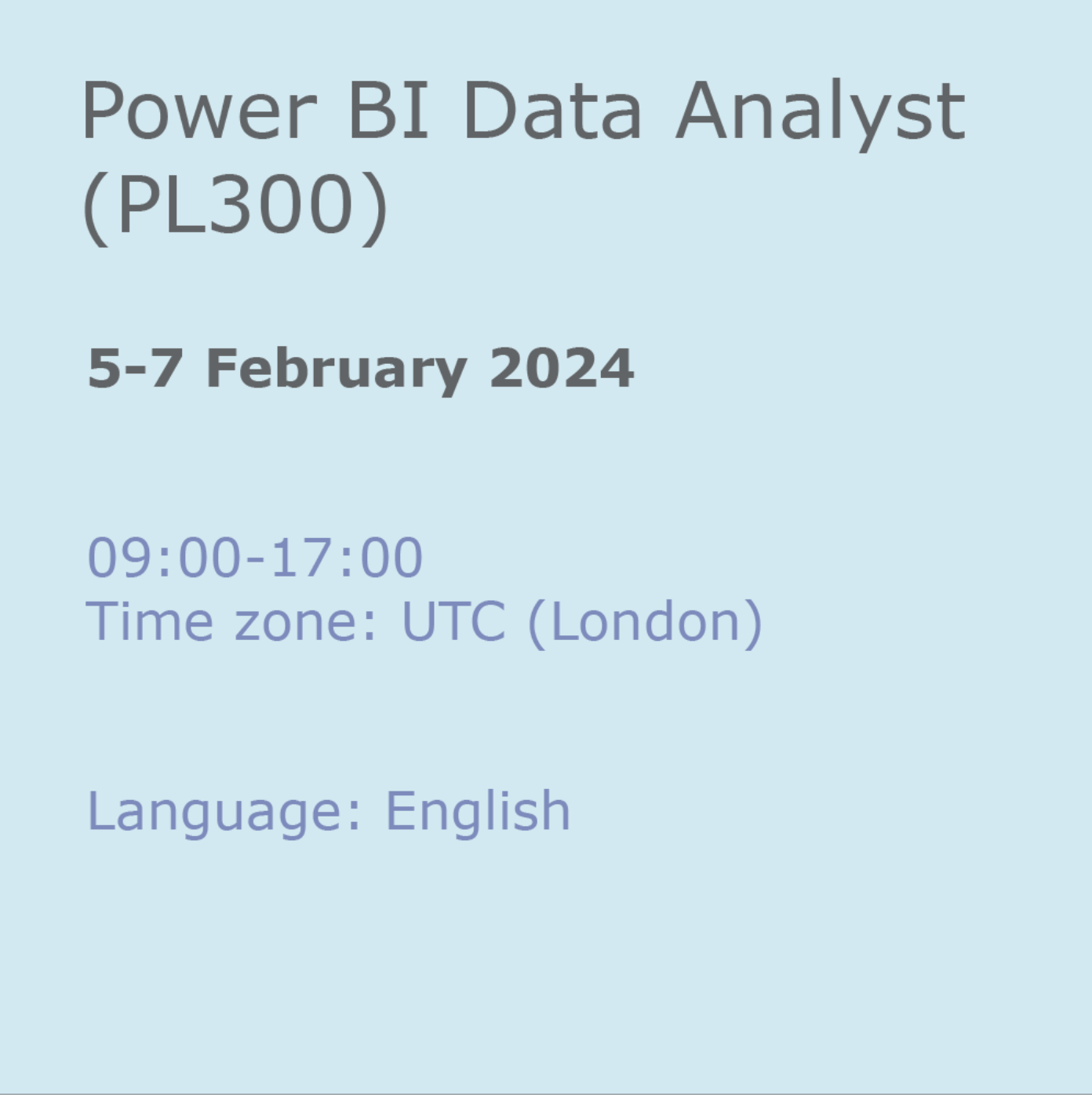

Power BI Data Analyst (PL300)

By Online Productivity Training

OVERVIEW: This official Microsoft Power BI training course will teach you how to connect to data from many sources, clean and transform it using Power Query, create a data model consisting of multiple tables connected with relationships, and build visualisations and reports to show the patterns in the data. The course explores formulas created using the DAX language, including advanced date intelligence calculations. Additional visualisation features, including interactivity between the elements of a report page, are covered, as well as parameters and row-level security, which allows a report to be tailored according to who is viewing it. The course also shows how to publish reports and dashboards to a workspace on the Power BI Service.

COURSE BENEFITS:
- Learn how to clean, transform, and load data from many sources
- Use database queries in Power Query to combine tables using append and merge
- Create and manage a data model in Power BI consisting of multiple tables connected with relationships
- Build measures and other calculations in the DAX language to plot in reports
- Manage advanced time calculations using date tables
- Optimise report calculations using the Performance Analyzer
- Manage and share report assets to the Power BI Service
- Prepare for the official Microsoft PL-300 exam using Microsoft Official Courseware

WHO IS THE COURSE FOR?
- Data Analysts with little or no experience of Power BI who wish to upgrade their knowledge to include Business Intelligence
- Management Consultants who need to conduct rapid analysis of their clients' data to answer specific business questions
- Analysts who need to upgrade their organisation from a simple Excel- or SQL-based management reporting system to a dynamic BI system
- Data Analysts who wish to develop organisation-wide reporting in the form of web reports or phone apps
- Marketers in data-intensive organisations who wish to build visually appealing, dynamic charts for their stakeholders to use

COURSE OUTLINE
- Module 1 Getting Started With Microsoft Data Analytics: Data analytics and Microsoft; Getting Started with Power BI
- Module 2 Get Data In Power BI: Get data from various data sources; Optimize performance; Resolve data errors; Lab: Prepare Data in Power BI Desktop
- Module 3 Clean, Transform And Load Data In Power BI: Data shaping; Data profiling; Enhance the data structure; Lab: Load Data in Power BI Desktop
- Module 4 Design A Data Model In Power BI: Introduction to data modelling; Working with Tables; Dimensions and Hierarchies; Lab: Model Data in Power BI Desktop
- Module 5 Create Model Calculations Using DAX In Power BI: Introduction to DAX; Real-time Dashboards; Advanced DAX; Lab 1: Create DAX Calculations in Power BI Desktop, Part 1; Lab 2: Create DAX Calculations in Power BI Desktop, Part 2
- Module 6 Optimize Model Performance: Optimize the data model for performance; Optimize DirectQuery models
- Module 7 Create Reports: Design a Report; Enhance the Report; Lab 1: Design a Report in Power BI Desktop, Part 1; Lab 2: Design a Report in Power BI Desktop, Part 2
- Module 8 Create Dashboards: Create a Dashboard; Real-time Dashboards; Enhance a Dashboard; Lab: Create a Power BI Dashboard
- Module 9 Perform Advanced Analytics: Advanced analytics; Data Insights through AI Visuals; Lab: Perform Data Analysis in Power BI Desktop
- Module 10 Create And Manage Workspaces: Creating Workspaces; Sharing and managing assets
- Module 11 Manage Datasets In Power BI: Parameters; Datasets
- Module 12 Row-Level Security: Security in Power BI; Lab: Enforce Row-Level Security

Python With Data Science

By Nexus Human

Duration: 2 Days (12 CPD hours)

Audience: Data Scientists, Software Developers, IT Architects, and Technical Managers. Participants should have a general knowledge of statistics and programming and be familiar with Python.

Overview:
- NumPy, pandas, Matplotlib, scikit-learn
- Python REPLs
- Jupyter Notebooks
- Data analytics life-cycle phases
- Data repairing and normalizing
- Data aggregation and grouping
- Data visualization
- Data science algorithms for supervised and unsupervised machine learning

The course covers theoretical and technical aspects of using Python in Applied Data Science projects and Data Logistics use cases.

- Python for Data Science: Using Modules; Listing Methods in a Module; Creating Your Own Modules; List Comprehension; Dictionary Comprehension; String Comprehension; Python 2 vs Python 3; Sets (Python 3+); Python Idioms; Python Data Science "Ecosystem"; NumPy; NumPy Arrays; NumPy Idioms; pandas; Data Wrangling with pandas' DataFrame; SciPy; Scikit-learn; SciPy or scikit-learn?; Matplotlib; Python vs R; Python on Apache Spark; Python Dev Tools and REPLs; Anaconda; IPython; Visual Studio Code; Jupyter; Jupyter Basic Commands; Summary
- Applied Data Science: What is Data Science?; Data Science Ecosystem; Data Mining vs. Data Science; Business Analytics vs. Data Science; Data Science, Machine Learning, AI?; Who is a Data Scientist?; Data Science Skill Sets Venn Diagram; Data Scientists at Work; Examples of Data Science Projects; An Example of a Data Product; Applied Data Science at Google; Data Science Gotchas; Summary
- Data Analytics Life-cycle Phases: Big Data Analytics Pipeline; Data Discovery Phase; Data Harvesting Phase; Data Priming Phase; Data Logistics and Data Governance; Exploratory Data Analysis; Model Planning Phase; Model Building Phase; Communicating the Results; Production Roll-out; Summary
- Repairing and Normalizing Data: Repairing and Normalizing Data; Dealing with the Missing Data; Sample Data Set; Getting Info on Null Data; Dropping a Column; Interpolating Missing Data in pandas; Replacing the Missing Values with the Mean Value; Scaling (Normalizing) the Data; Data Preprocessing with scikit-learn; Scaling with the scale() Function; The MinMaxScaler Object; Summary
- Descriptive Statistics Computing Features in Python: Descriptive Statistics; Non-uniformity of a Probability Distribution; Using NumPy for Calculating Descriptive Statistics Measures; Finding Min and Max in NumPy; Using pandas for Calculating Descriptive Statistics Measures; Correlation; Regression and Correlation; Covariance; Getting Pairwise Correlation and Covariance Measures; Finding Min and Max in pandas DataFrame; Summary
- Data Aggregation and Grouping: Data Aggregation and Grouping; Sample Data Set; The pandas.core.groupby.SeriesGroupBy Object; Grouping by Two or More Columns; Emulating SQL's WHERE Clause; The Pivot Tables; Cross-Tabulation; Summary
- Data Visualization with matplotlib: Data Visualization; What is matplotlib?; Getting Started with matplotlib; The Plotting Window; The Figure Options; The matplotlib.pyplot.plot() Function; The matplotlib.pyplot.bar() Function; The matplotlib.pyplot.pie() Function; Subplots; Using the matplotlib.gridspec.GridSpec Object; The matplotlib.pyplot.subplot() Function; Hands-on Exercise; Figures; Saving Figures to File; Visualization with pandas; Working with matplotlib in Jupyter Notebooks; Summary
- Data Science and ML Algorithms in scikit-learn: Data Science, Machine Learning, AI?; Types of Machine Learning; Terminology: Features and Observations; Continuous and Categorical Features (Variables); Terminology: Axis; The scikit-learn Package; scikit-learn Estimators; Models, Estimators, and Predictors; Common Distance Metrics; The Euclidean Metric; The LIBSVM format; Scaling of the Features; The Curse of Dimensionality; Supervised vs Unsupervised Machine Learning; Supervised Machine Learning Algorithms; Unsupervised Machine Learning Algorithms; Choose the Right Algorithm; Life-cycles of Machine Learning Development; Data Split for Training and Test Data Sets; Data Splitting in scikit-learn; Hands-on Exercise; Classification Examples; Classifying with k-Nearest Neighbors (SL); k-Nearest Neighbors Algorithm; The Error Rate; Hands-on Exercise; Dimensionality Reduction; The Advantages of Dimensionality Reduction; Principal component analysis (PCA); Hands-on Exercise; Data Blending; Decision Trees (SL); Decision Tree Terminology; Decision Tree Classification in Context of Information Theory; Information Entropy Defined; The Shannon Entropy Formula; The Simplified Decision Tree Algorithm; Using Decision Trees; Random Forests; SVM; Naive Bayes Classifier (SL); Naive Bayesian Probabilistic Model in a Nutshell; Bayes Formula; Classification of Documents with Naive Bayes; Unsupervised Learning Type: Clustering; Clustering Examples; k-Means Clustering (UL); k-Means Clustering in a Nutshell; k-Means Characteristics; Regression Analysis; Simple Linear Regression Model; Linear vs Non-Linear Regression; Linear Regression Illustration; Major Underlying Assumptions for Regression Analysis; Least-Squares Method (LSM); Locally Weighted Linear Regression; Regression Models in Excel; Multiple Regression Analysis; Logistic Regression; Regression vs Classification; Time-Series Analysis; Decomposing Time-Series; Summary
- Lab Exercises: Lab 1 - Learning the Lab Environment; Lab 2 - Using Jupyter Notebook; Lab 3 - Repairing and Normalizing Data; Lab 4 - Computing Descriptive Statistics; Lab 5 - Data Grouping and Aggregation; Lab 6 - Data Visualization with matplotlib; Lab 7 - Data Splitting; Lab 8 - k-Nearest Neighbors Algorithm; Lab 9 - The k-means Algorithm; Lab 10 - The Random Forest Algorithm
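The outline pairs "Scaling of the Features" and "The Euclidean Metric" with k-Nearest Neighbors and "The Error Rate". The course uses scikit-learn's `MinMaxScaler` and estimators. As a minimal plain-Python sketch of the same ideas (not the course's lab code), with hypothetical customer data whose two features have very different scales, the pipeline looks like this:

```python
import math
from collections import Counter


def min_max_fit(rows):
    """Return per-column (min, max) pairs, like fitting a min-max scaler."""
    cols = list(zip(*rows))
    return [(min(c), max(c)) for c in cols]


def min_max_transform(rows, ranges):
    """Scale each feature with (x - min) / (max - min), mapping training data to [0, 1]."""
    return [
        [(x - lo) / (hi - lo) if hi > lo else 0.0 for x, (lo, hi) in zip(row, ranges)]
        for row in rows
    ]


def knn_predict(train_x, train_y, query, k=3):
    """Majority vote among the k nearest training points (Euclidean distance)."""
    dists = sorted((math.dist(query, x), y) for x, y in zip(train_x, train_y))
    votes = Counter(label for _, label in dists[:k])
    return votes.most_common(1)[0][0]


def error_rate(predicted, actual):
    """Fraction of predictions that do not match the true labels."""
    wrong = sum(p != a for p, a in zip(predicted, actual))
    return wrong / len(actual)


# Hypothetical data: [age, annual income]; labels are product tiers
train_x = [[25, 40000], [30, 42000], [45, 90000], [50, 95000], [23, 38000], [48, 88000]]
train_y = ["basic", "basic", "premium", "premium", "basic", "premium"]
test_x = [[28, 41000], [47, 91000]]
test_y = ["basic", "premium"]

# Fit the scaler on the training data only, then apply it to both sets
ranges = min_max_fit(train_x)
train_scaled = min_max_transform(train_x, ranges)
test_scaled = min_max_transform(test_x, ranges)

preds = [knn_predict(train_scaled, train_y, q, k=3) for q in test_scaled]
print(preds, error_rate(preds, test_y))
```

Without scaling, income (tens of thousands) would dominate the Euclidean distance and age would barely matter. Fitting the scaler on the training split only avoids leaking information from the test set.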

Gas Lift Design & Optimization using NODAL Analysis

By EnergyEdge - Training for a Sustainable Energy Future

About this training course: Gas lift is one of the predominant forms of artificial lift used for lifting liquids from conventional, unconventional, onshore, and offshore assets. Gas lift and its various forms (intermittent lift, gas-assisted plunger lift) provide lift options over the life of the well when selected and applied properly. This 5-day training course is designed to give participants a thorough understanding of gas-lift technology and related application concepts. It covers key topics such as the application envelope and the relative strengths and weaknesses of gas lift and its different forms, such as intermittent lift and gas-assisted plunger lift. Participants solve examples and class problems throughout the course. Animations and videos reinforce the concepts under discussion.

Unique Features:
- Hands-on usage of SNAP software to solve gas-lift exercises
- Discussion on digital oil field
- Machine learning applications in gas-lift optimization

Training Objectives: After the completion of this training course, participants will be able to:
- Understand the fundamental theories and procedures related to gas-lift operations
- Easily recognize the different components of the gas-lift system and their basic structural and operational features
- Design a gas-lift installation
- Comprehend how digital oilfield tools help address ESP challenges
- Examine recent advances in real-time approaches to production monitoring and lift management

Target Audience: This training course is suitable for and will greatly benefit the following groups:
- Production, reservoir, completion, drilling and facilities engineers, analysts, and operators
- Anyone interested in learning about the implications of gas-lift systems for their fields and reservoirs

Course Level: Intermediate, Advanced

Training Methods: The training instructor relies on a highly interactive training method to enhance the learning process. This method ensures that all participants gain a complete understanding of all the topics covered. The training environment is highly stimulating, challenging, and effective because participants learn through case studies that allow them to apply the material in their own organisations.

Course Duration: 5 days in total (35 hours).

Training Schedule:
- 0830 - Registration
- 0900 - Start of training
- 1030 - Morning break
- 1045 - Training recommences
- 1230 - Lunch break
- 1330 - Training recommences
- 1515 - Afternoon break
- 1530 - Training recommences
- 1700 - End of training

The maximum number of participants allowed for this training course is 20. This course is also available in our Virtual Instructor Led Training (VILT) format.

Prerequisites: Understanding of petroleum production concepts. Each participant needs a laptop/PC for solving class examples using software provided during class. The laptop/PC needs a current Windows operating system and at least 500 MB of free disk space. Participants should have administrator rights to install software.

Trainer: Your expert course leader has over 35 years' work experience in multiphase flow, artificial lift, real-time production optimization, and software development/management. His current work focuses on use cases such as failure prediction, virtual flow rate determination, wellhead integrity surveillance, corrosion, equipment maintenance, and DTS/DAS interpretation. He has worked for national oil companies, majors, independents, and service providers globally. He holds multiple patents and has delivered a multitude of industry presentations. Twice selected as an SPE Distinguished Lecturer, he also volunteers on SPE committees. He holds a Bachelor's and a Master's in chemical engineering from Gujarat University and IIT Kanpur, India, and a Ph.D. in petroleum engineering from the University of Tulsa, USA.
Highlighted Work Experience: At Weatherford, he consulted with clients and directed teams on digital oilfield solutions, including LOWIS, a solution that underpinned the production operations of Chevron and Occidental Petroleum across the globe. He has worked with and consulted on equipment such as field controllers, VSDs, permanent downhole gauges, multiphase flow meters, and fibre-optic-based measurements. He shepherded an enterprise-class solution that is being deployed at a major oil and gas producer for production management, including artificial lift optimization using real-time data and deep-learning data analytics, and developed a workshop on digital oilfield approaches for production engineers.

Patents:
- Principal inventor: 'Smarter Slug Flow Conditioning and Control'
- Co-inventor: 'Technique for Production Enhancement with Downhole Monitoring of Artificially Lifted Wells'
- Co-inventor: 'Wellbore real-time monitoring and analysis of fracture contribution'

Worldwide Experience in Training / Seminar / Workshop Deliveries: Besides delivering several SPE webinars and ALRDC and SPE trainings globally, he has taught artificial lift at Texas Tech, Missouri S&T, Louisiana State, the University of Southern California, and the University of Houston. He has conducted seminars and bespoke trainings/workshops globally for practising professionals:
- Companies: Basra Oil Company, ConocoPhillips, Chevron, EcoPetrol, Equinor, KOC, ONGC, LukOil, PDO, PDVSA, PEMEX, Petronas, Repsol, Saudi Aramco, Shell, Sonatrach, QP, Tatneft, YPF, and others.
- Countries: USA, Algeria, Argentina, Bahrain, Brazil, Canada, China, Croatia, Congo, Ghana, India, Indonesia, Iraq, Kazakhstan, Kenya, Kuwait, Libya, Malaysia, Oman, Mexico, Norway, Qatar, Romania, Russia, Serbia, Saudi Arabia, S Korea, Tanzania, Thailand, Tunisia, Turkmenistan, UAE, Ukraine, Uzbekistan, Venezuela.

Since the pandemic, he has also provided virtual training for PetroEdge, ALRDC, School of Mines, Repsol, UEP-Pakistan, and others.
POST TRAINING COACHING SUPPORT (OPTIONAL): To further optimise your learning experience from our courses, we also offer individualised 'One to One' coaching support for 2 hours post training. We can help improve your competence in your chosen area of interest, based on your learning needs and available hours. This is a great opportunity to improve your capability and confidence in a particular area of expertise. It will be delivered over a secure video conference call by one of our senior trainers. They will work with you to create a tailor-made coaching program that will help you achieve your goals faster. Request further information about post-training support and applicable fees.
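NODAL analysis, named in this course's title, finds a well's operating point where the inflow performance relationship (IPR) meets the outflow, or vertical lift performance (VLP), curve. Gas lift lightens the fluid column, which lowers the outflow curve and raises the rate. As a minimal sketch, assuming Vogel's IPR for the inflow and a purely hypothetical quadratic outflow curve in place of a real multiphase-flow correlation (this is not the SNAP software used on the course), the intersection can be found by bisection:

```python
import math


def ipr_pwf(q, p_res, q_max):
    """Flowing bottomhole pressure from Vogel's IPR, solved for pwf.

    Vogel: q/q_max = 1 - 0.2*(pwf/p_res) - 0.8*(pwf/p_res)**2
    """
    ratio = 1.0 - q / q_max
    x = (-0.2 + math.sqrt(0.04 + 3.2 * ratio)) / 1.6  # positive root of the quadratic
    return x * p_res


def vlp_pwf(q, p_base, a, b):
    """Hypothetical outflow curve: pwf required to lift rate q.

    A real VLP comes from a multiphase-flow correlation; this quadratic
    stand-in only mimics its rising shape over the rate range used here.
    """
    return p_base + a * q + b * q * q


def operating_point(p_res, q_max, p_base, a, b, tol=1e-6):
    """Bisection on rate q for the IPR/VLP intersection; returns (q, pwf)."""

    def mismatch(q):
        return vlp_pwf(q, p_base, a, b) - ipr_pwf(q, p_res, q_max)

    if mismatch(0.0) > 0:
        return 0.0, p_res  # outflow needs more than reservoir pressure: no flow
    lo, hi = 0.0, q_max    # mismatch(q_max) > 0 because IPR pwf is 0 there
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mismatch(mid) > 0:
            hi = mid
        else:
            lo = mid
    q = 0.5 * (lo + hi)
    return q, ipr_pwf(q, p_res, q_max)


# Hypothetical well: 3000 psi reservoir pressure, 2000 stb/d absolute open flow
natural = operating_point(3000.0, 2000.0, p_base=800.0, a=0.5, b=0.0002)
# Gas lift crudely represented as a lighter fluid column (lower p_base)
gas_lifted = operating_point(3000.0, 2000.0, p_base=500.0, a=0.5, b=0.0002)
print(natural, gas_lifted)
```

Because the IPR pressure falls and the outflow pressure rises with rate, the mismatch is monotonic and bisection converges to the single operating point.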

Artificial Lift and Real-Time Production Optimization in Digital Oilfield

By EnergyEdge - Training for a Sustainable Energy Future

About this training course

Artificial lift systems are an important part of production operations throughout the entire lifecycle of an asset; many oil and gas wells require artificial lift for most of their life cycle. This 5-day training course offers a thorough treatment of artificial lift techniques, including design and operation for production optimization. With the increasing need to optimize dynamic production in highly constrained cost environments, the opportunities and issues related to real-time measurements and optimization techniques need to be discussed and understood. Artificial lift selection and life cycle analysis are also covered. These concepts are discussed and reinforced using case studies, quizzing tools, and software exercises. Participants solve examples and class problems throughout the course, and animations and videos reinforce the concepts under discussion. Understanding these production concepts is essential for exploiting existing assets profitably.

Unique Features:
- Hands-on use of SNAP software to solve gas-lift exercises
- Discussion of the digital oil field
- Machine learning applications in gas-lift optimization

Training Objectives
After completing this training course, participants will be able to:
- Understand the basic and advanced concepts of each form of artificial lift system, including its application envelope, relative strengths, and weaknesses
- Recognize the different components from downhole to the surface and their basic structural and operational features
- Design and analyze different components using appropriate software tools
- Understand the challenges facing artificial lift applications and how to mitigate them during selection, design, and operation
- Learn about the role of digital oilfield tools and techniques and their applications in artificial lift and production optimization
- Learn about use cases of machine learning and artificial intelligence in artificial lift

Target Audience
This training course is suitable for, and will greatly benefit, the following groups:
- Production, reservoir, completion, drilling, and facilities engineers, analysts, and operators
- Anyone interested in learning about the selection, design, analysis, and optimum operation of artificial lift and related production systems

Course Level: Intermediate, Advanced

Training Methods
The instructor uses a highly interactive training method to enhance the learning process and ensure that all participants gain a complete understanding of the topics covered. The training environment is stimulating, challenging, and effective because participants learn through case studies that allow them to apply the material in their own organizations.

Course Duration: 5 days in total (35 hours).

Training Schedule
0830 - Registration
0900 - Start of training
1030 - Morning break
1045 - Training recommences
1230 - Lunch break
1330 - Training recommences
1515 - Afternoon break
1530 - Training recommences
1700 - End of training

The maximum number of participants for this training course is 20. This course is also available in our Virtual Instructor Led Training (VILT) format.

Prerequisites: An understanding of petroleum production concepts. Each participant needs a laptop/PC to solve class examples using software provided during class. The laptop/PC must run a current Windows operating system and have at least 500 MB of free disk space, and participants should have administrator rights to install software.

Trainer
Your expert course leader has over 35 years of work experience in multiphase flow, artificial lift, real-time production optimization, and software development/management. His current work focuses on a variety of use cases such as failure prediction, virtual flow rate determination, wellhead integrity surveillance, corrosion, equipment maintenance, and DTS/DAS interpretation.
He has worked for national oil companies, majors, independents, and service providers globally. He holds multiple patents and has delivered many industry presentations. Twice selected as an SPE Distinguished Lecturer, he also volunteers on SPE committees. He earned a Bachelor's and a Master's in chemical engineering from Gujarat University and IIT Kanpur, India, and a Ph.D. in Petroleum Engineering from the University of Tulsa, USA.

Highlighted Work Experience:
- At Weatherford, consulted with clients and directed teams on digital oilfield solutions, including LOWIS, a solution that underpinned the production operations of Chevron and Occidental Petroleum across the globe.
- Worked with and consulted on equipment such as field controllers, VSDs, downhole permanent gauges, multiphase flow meters, and fibre-optic-based measurements.
- Shepherded an enterprise-class solution, now being deployed at a major oil and gas producer, for production management, including artificial lift optimization using real-time data and deep-learning data analytics.
- Developed a workshop on digital oilfield approaches for production engineers.

Patents:
- Principal inventor: 'Smarter Slug Flow Conditioning and Control'
- Co-inventor: 'Technique for Production Enhancement with Downhole Monitoring of Artificially Lifted Wells'
- Co-inventor: 'Wellbore real-time monitoring and analysis of fracture contribution'

Worldwide Experience in Training / Seminar / Workshop Deliveries:
Besides delivering several SPE webinars and ALRDC and SPE trainings globally, he has taught artificial lift at Texas Tech, Missouri S&T, Louisiana State, the University of Southern California, and the University of Houston. He has conducted seminars and bespoke trainings/workshops globally for practicing professionals:
Companies: Basra Oil Company, ConocoPhillips, Chevron, EcoPetrol, Equinor, KOC, ONGC, LukOil, PDO, PDVSA, PEMEX, Petronas, Repsol, Saudi Aramco, Shell, Sonatrach, QP, Tatneft, YPF, and others.
Countries: USA, Algeria, Argentina, Bahrain, Brazil, Canada, China, Croatia, Congo, Ghana, India, Indonesia, Iraq, Kazakhstan, Kenya, Kuwait, Libya, Malaysia, Oman, Mexico, Norway, Qatar, Romania, Russia, Serbia, Saudi Arabia, South Korea, Tanzania, Thailand, Tunisia, Turkmenistan, UAE, Ukraine, Uzbekistan, Venezuela.

Since the pandemic, he has also provided virtual training for PetroEdge, ALRDC, School of Mines, Repsol, UEP-Pakistan, and others.

POST-TRAINING COACHING SUPPORT (OPTIONAL)
To further optimise your learning experience from our courses, we also offer individualized 'One to One' coaching support for 2 hours after training. We can help improve your competence in your chosen area of interest, based on your learning needs and available hours. This is a great opportunity to improve your capability and confidence in a particular area of expertise. Coaching is delivered over a secure video conference call by one of our senior trainers, who will work with you to create a tailor-made coaching program that helps you achieve your goals faster. Request further information about post-training support and the applicable fees.

Accreditations and Affiliations

This course covers essential Python basics in our interactive, instructor-led Live Virtual Classroom. It is a very good introduction to fundamental programming concepts, using Python as the programming language. Programmers use these concepts daily, and learning them is your first step toward working as a programmer. By the end, you'll be comfortable writing Python code, and you will have completed small projects that you can use as examples and templates for building larger projects.